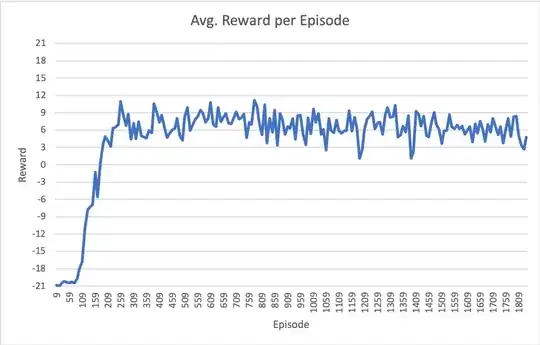

I am in the process of implementing the DQN model from scratch in PyTorch with the target environment of Atari Pong. After a while of tweaking hyper-parameters, I cannot seem to get the model to achieve the performance that is reported in most publications (~ +21 reward; meaning that the agent wins almost every volley).

My most recent results are shown in the following figure. Note that the x axis is episodes (full games to 21), but the total training iterations is ~6.7 million.

The specifics of my setup are as follows:

Model

class DQN(nn.Module):

def __init__(self, in_channels, outputs):

super(DQN, self).__init__()

self.conv1 = nn.Conv2d(in_channels=in_channels, out_channels=32, kernel_size=8, stride=4)

self.conv2 = nn.Conv2d(in_channels=32, out_channels=64, kernel_size=4, stride=2)

self.conv3 = nn.Conv2d(in_channels=64, out_channels=64, kernel_size=3, stride=1)

self.fc1 = nn.Linear(in_features=64*7*7 , out_features=512)

self.fc2 = nn.Linear(in_features=512, out_features=outputs)

def forward(self, x):

x = F.relu(self.conv1(x))

x = F.relu(self.conv2(x))

x = F.relu(self.conv3(x))

x = x.view(-1, 64 * 7 * 7)

x = F.relu(self.fc1(x))

x = self.fc2(x)

return x # return Q values of each action

Hyperparameters

- batch size: 32

- replay memory size: 100000

- initial epsilon: 1.0

- epsilon anneals linearly to 0.02 over 100000 steps

- random warmstart episodes: ~50000

- update target model every: 1000 steps

- optimizer = optim.RMSprop(policy_net.parameters(), lr=0.0025, alpha=0.9, eps=1e-02, momentum=0.0)

Additional info

- OpenAI gym Pong-v0 environment

- Feeding model stacks of 4 last observed frames, scaled and cropped to 84x84 such that only the "playing area" is visible.

- Treat losing a volley (end-of-life) as a terminal state in the replay buffer.

- Using smooth_l1_loss, which acts as Huber loss

- Clipping gradients between -1 and 1 before optimizing

- I offset the beginning of each episode with 4-30 no-op steps as the papers suggest

Has anyone had a similar experience of getting stuck around 6 - 9 average reward per episode like this?

Any suggestions for changes to hyperparameters or algorithmic nuances would be greatly appreciated!