I'm trying to replicate the DeepMind paper results, so I implemented my own DQN. I left it training for more than 4 million frames (more than 2000 episodes) on SpaceInvaders-v4 (OpenAI-Gym) and it couldn't finish a full episode. I tried two different learning rates (0.0001 and 0.00125) and seems to work better with 0.0001, but the median score never raises above 200. I'm using a double DQN. Here is my code and some photos of the graphs I'm getting each session. Between sessions I'm saving the network weights; I'm updating the target network every 1000 steps. I can't see if I'm doing something wrong, so any help would be appreciated. I'm using the same CNN construction as the DQN paper.

Here's the action selection function; it uses a batch of 4 80x80 processed experiences in grayscale to select the action (s_batch means for state batch):

def action_selection(self, s_batch):

action_values = self.parallel_model.predict(s_batch)

best_action = np.argmax(action_values)

best_action_value = action_values[0, best_action]

random_value = np.random.random()

if random_value < AI.epsilon:

best_action = np.random.randint(0, AI.action_size)

return best_action, best_action_value

Here is my training function. It uses the past experiences as training; I tried to implement that if it lose any life, it wouldn't get any extra rewards, so in theory, the agent would try to not die:

def training(self, replay_s_batch, replay_ns_batch):

Q_values = []

batch_size = len(AI.replay_s_batch)

Q_values = np.zeros((batch_size, AI.action_size))

for m in range(batch_size):

Q_values[m] = self.parallel_model.predict(AI.replay_s_batch[m].reshape(AI.batch_shape))

new_Q = self.parallel_target_model.predict(AI.replay_ns_batch[m].reshape(AI.batch_shape))

Q_values[m, [item[0] for item in AI.replay_a_batch][m]] = AI.replay_r_batch[m]

if np.all(AI.replay_d_batch[m] == True):

Q_values[m, [item[0] for item in AI.replay_a_batch][m]] = AI.gamma * np.max(new_Q)

if lives == 0:

loss = self.parallel_model.fit(np.asarray(AI.replay_s_batch).reshape(batch_size,80,80,4), Q_values, batch_size=batch_size, verbose=0)

if AI.epsilon > AI.final_epsilon:

AI.epsilon -= (AI.initial_epsilon-AI.final_epsilon)/AI.epsilon_decay

replay_s_batch it's a batch of (batch_size) experience replay states (packs of 4 experiences), and replay_ns_batch it's full of 4 next states. The batch size is 32.

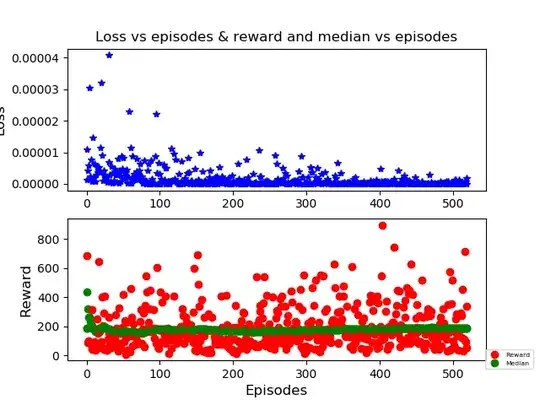

And here are some results, after training:

In blue, the loss (I think it's correct; it's near-zero). Red dots are the different match scores (as you can see, it does sometimes really good matches). In green, the median (near 190 in this training, with learning rate = 0.0001)

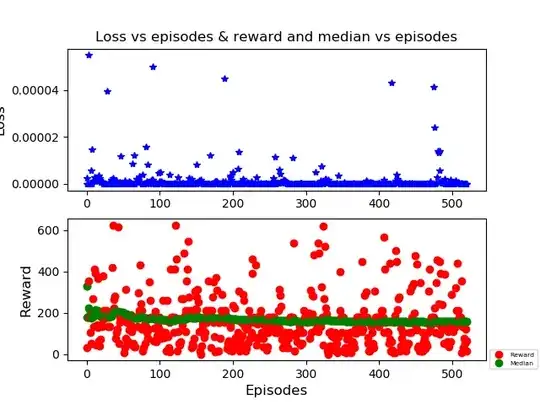

Here is the last training, with lr = 0.00125; the results are worse (it's median it's about 160). Anyway the line it's almost straight, I don't see any variation in any case.

So anyone can point me to the right direction? I tried a similar approach with pendulum and it worked properly. I know that with Atari games it takes more time but a week or so I think it's enough, and it seems to be stuck.

In case someone need to see another part of my code just tell me.

Here is the last training, with lr = 0.00125; the results are worse (it's median it's about 160). Anyway the line it's almost straight, I don't see any variation in any case.

So anyone can point me to the right direction? I tried a similar approach with pendulum and it worked properly. I know that with Atari games it takes more time but a week or so I think it's enough, and it seems to be stuck.

In case someone need to see another part of my code just tell me.

Edit: With the suggestions provided, I modified the action_selection function. Here it is:

def action_selection(self, s_batch):

if np.random.rand() < AI.epsilon:

best_action = env.action_space.sample()

else:

action_values = self.parallel_model.predict(s_batch)

best_action = np.argmax(action_values[0])

return best_action

To clarify my last edit: with action_values you get the q values; with best_action you get the action which corresponds to the max q value. Should I return that or just the max q value?