$\pi(s)$ does not mean $q(s,a)$ here. $\pi(s)$ is a policy that represents probability distribution over action space for a specific state. $q(s,a)$ is a state-action pair value function that tells us how much reward do we expect to get by taking action $a$ in state $s$ onwards.

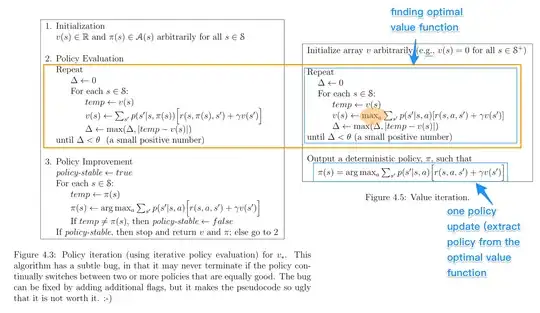

For the value iteration on the right side with this update formula:

$v(s) \leftarrow \max_\limits{a} \sum_\limits{s'}p(s'\mid s, a)[r(s, a, s') + \gamma v(s')]$

we have an implicit greedy deterministic policy that updates value of state $s$ based on the greedy action that gives us the biggest expected return. When the value iteration converges to its values based on greedy behaviour after $n$ iterations we can get the explicit optimal policy with:

$\pi(s) = \arg \max_\limits{a} \sum_\limits{s'} p(s'\mid s, a)[r(s, a, s') + \gamma v(s')]$

here we are basically saying that the action that has highest expected reward for state $s$ will have probability of 1, and all other actions in action space will have probability of 0

For the policy evaluation on the left side with this update formula:

$v(s) \leftarrow \sum_\limits{s'}p(s'\mid s, \pi(s))[r(s, \pi(s), s') + \gamma v(s')]$

we have an explicit policy $\pi$ that is not greedy in general case in the beginning. That policy is usually randomly initialized so the actions that it takes will not be greedy, it means we can start with policy that takes some pretty bad actions. It also does not need to be deterministic but I guess in this case it is. Here we are updating value of state $s$ according to the current policy $\pi$.

After policy evaluation step ran for $n$ iterations we start with the policy improvement step:

$\pi(s) = \arg \max_\limits{a} \sum_\limits{s'} p(s'\mid s, a)[r(s, a, s') + \gamma v(s')]$

here we are greedily updating our policy based on the values of states that we got through policy evaluation step. It is guaranteed that our policy will improve but it is not guaranteed that our policy will be optimal after only one policy improvement step. After improvement step we do the evaluation step for new improved policy and after that we again do the improvement step and so on until we converge to the optimal policy