The equation $\frac12(x_1w_1 + x_2w_2 - y)^2$ is called the $Error (E)$ (assuming $y$ to be continuous which is not the case in case of classifiers). If you write this equation in Physics or Maths it represents a family of curves in 4D (the curves are continuous but for visualisation we will assume it to be a family of curves).

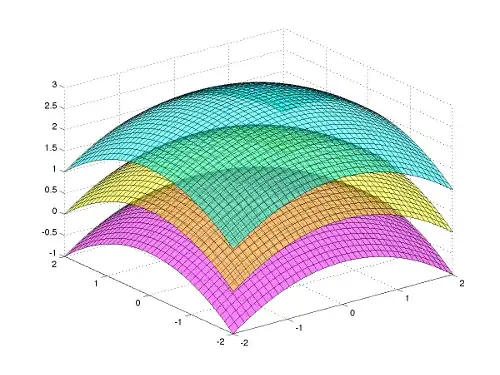

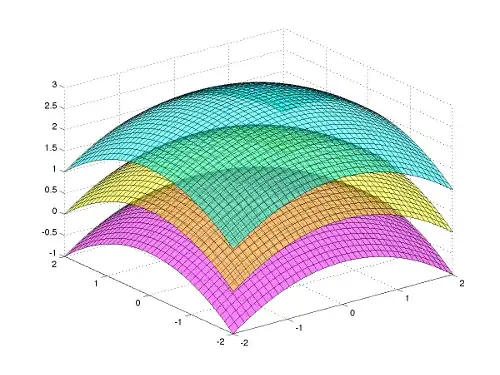

Here is a representative equation of what it would have looked like had the error been $\frac12(x_1w_1 - y)^2$ a 3D curve.

This is a scalar quantity which represents the value of error at different places for different values of $w1$ and $w2$. Now gradient of a scalar is defined as $\nabla F$, where $F$ is a scalar, on doing this operation you get a vector, which is perpendicular to the equi-potential or more suitably equi-error surface, i.e. if you trace all the points which give the same error, you will get a curve, and its gradient at any point is the vector perpendicular to the curve at that given point. There are many proofs for this but here is a very simple and nice proof.

Now lets look at the equation of the constraint $x_1w_1 + x_2w_2 = y$. In case of a 3D error curve, the constraint is giving us a plane which is parallel to the tangential plane of the equi-error surface at a given point. You can look at this method of how to find tangential planes and derive the plane yourself, where $z = Error(E)$ and $w1$ and $y$ are your $x$ and $y$.

Thus it is quite clear that the gradient will be perpendicular to the constraint, and this is the reason we use gradients because according to mathematics if you move in a direction perpendicular to an equi-potential surface you get the maximum change than any other direction for same $dl$ movement.

I would highly suggest you check out these videos on gradient from Khan academy. This will hopefully give you a more intuitive understanding of why we do what we do in Neural Networks.