I've pondered this for a while without developing an intuition for the math behind the cause of this.

So what causes a model to need a low learning rate?

I've pondered this for a while without developing an intuition for the math behind the cause of this.

So what causes a model to need a low learning rate?

Gradient Descent is a method to find the optimum parameter of the hypothesis or minimize the cost function.

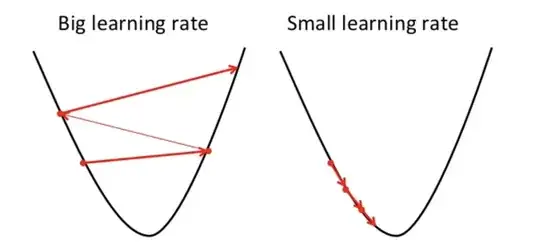

If the learning rate is high then it can overshoot the minimum and can fail to minimize the cost function.

hence result in a higher loss.

Since Gradient descent can only find local minimum so, the lower learning rate may result in bad performance. To do so, it is better to start with the random value of the hyperparameter can increase model training time but there are advanced methods such as adaptive gradient descent can manage the training time.

There are lots of optimizer for the same task but no optimizer is perfect. It depends on some factors

PS. It is always better to go with different rounds of gradient descent