Visualization Appreciated

The diagrams are nicely thought out. As you refine your comparative design visualizations, you might use something like Inkscape to draw them for web publication, whether or not you decide to submit a paper to a publisher or license your ideas Creative Commons.

Web Research Realities

The reliability of answers from the internet are a function of the author, how much time she or he took in researching the question themselves, what search terms are used, and where the search term is entered. This question falls under the more challenging to research, cases where the must reliable answers are in the results sets of experiments run in proprietary government and corporate research centers.

If the GM or Tesla or Toyota or DoD research results were posted on line, someone would likely be fired, sued, and possibly jailed and a team of lawyers would hunt down all references to the posted information, employing international agreements and backbone level content filters to eliminate the dissemination of secrets.

A Better Research Approach

We can determine with a fairly high confidence that a decision based on a single frame is much less likely to lead to collision avoidance, beginning with a simple thought experiment.

Two kids are playing creatively and decide to make up a game that involves a ball. One of the rules of the game is that each player can only run backward, not forward or sideways. That's what makes the same fun for these kids. It's silly because everyone wants to run forward to get to the ball. The rest of the rules of the game are not particularly relevant.

A machine driver is processing an image and goes to make the decision. In this case, the term decision indicates the use of a rules based system, whether fuzzy and probabilistic or of the former pure Boolean type, but that is not particularly relevant either. This thought experiment applies to a learned response arising from the training of an architecture built primarily of artificial networks as well.

Consider now the case where no decision or learned response is invoked on the basis of the direction of the ball travel or its proximity to the street and path of the vehicle being driven because the kids are facing away from the ball. It would not be reasonable to assume that the training data included this spontaneously made-up game. The result of the combination of child creativity and single frame selection leads to a delayed collision avoidance tactic, at best.

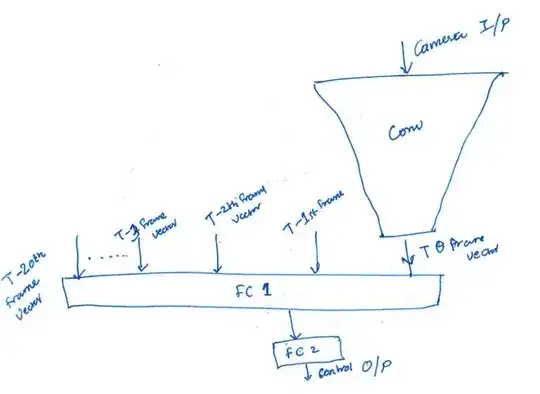

In contrast, if two or three frames are included in the analysis, the feature of the ball moving toward the street and the children, regardless of their orientation with respect to the ball and the street, may be detected as a feature of the entire system through which the vehicle is driving.

This is one of astronomical number of examples where the training without the temporal dimension will lead to a much higher likelihood of improper trajectory projection from pixel data on the basis of any reasonable training set than had training and use of the pixel data included the temporal dimension.

Mathematical Analysis

When the results of trials with real vehicles move from the domain of corporate intellectual property and the domain of governmental national security artifacts into the public domain, we will see our empirical evidence. Until then we can rest on theory. The above thought experiment and others like it can be represented. Consider the hypothesis of

$$ P_{\mathcal{A} = \mathcal{E}(S)} > P_{\mathcal{A} = \mathcal{E}(\vec{S})} \; \text{,}$$

where $P_c$ is the probability given condition $c$, $\mathcal{A}$ is the actuality (posteriori) and $\mathcal{E}$ is the expectation function applied to instantaneous sensory information $s$ on the left hand side of the inequality versus the recent history of instantaneous sensory information $\vec{s}$ on the right hand side.

If we bring intermediate actions (decisioning after each frame is acquired) into the scope of this question, then we may pose the hypothesis in a way that involves Markov's work on causality and prediction in chains of events.

The accuracy of decisioning based on optical acquisition in the context of collision avoidance is higher without the Markov property, in that historical optically acquired data in conjunction with new optically acquired data will produce better trajectory oriented computational collision avoidance results than without historical data.

Either of these might take considerable work to prove, but they are both likely to be provable in probabilistic terms with a fairly reasonable set of mathematical constraints placed on the system up front. We know this because the vast majority of thought experiences show an advantage to a vector of frames over a single frame for the determination of actions that are most likely to avoid a collision.

Design

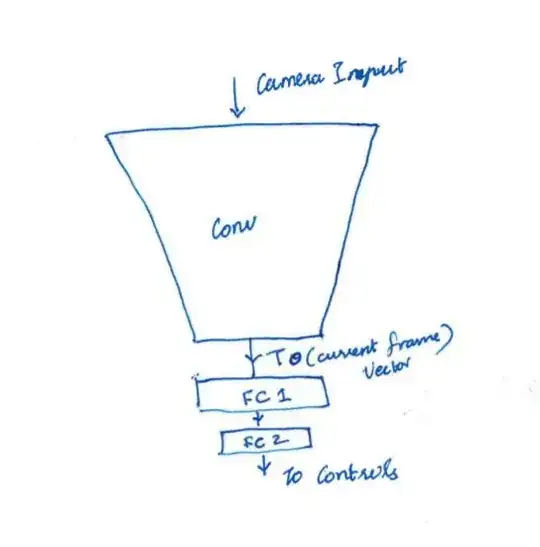

As is often the case, convolution kernel use in a CNN is likely to be the best design to recognize edge, contour, reflectivity, and texture features in collide-able object detection.

Assembly of trajectories (as a somewhat ethereal internal intermediate result) and subsequent determination of beeping, steering, acceleration, breaking, or signaling is likely best handled by recurrent networks of some type, the most publicly touted being b-LSTM or GRU based networks. The attention based handling and preemption discussed in many papers regarding real time system control are among the primary candidates for eventual common use among the designs. This is because changes in focus is common during human driving operations and is even detectable in birds and insects.

The simple case is when an ant detects one of these.

- A large insurmountable object

- A predator

- A morsel of good smelling food

- Water

The mode of behavior switches, probably in conjunction with the neural pathways for sensory information, when a preemptive stimulus is detected. Humans pilot and drive aircraft and motor vehicles this way too. When you pilot or drive next, bring into consciousness what you have learned unconsciously and this preemptive detection and change of attention and focus of task will become obvious.