Apologies for the lengthy title. My question is about the weight update rule for logistic regression using stochastic gradient descent.

I have just started experimenting on Logistic Regression. I came across two weight update expressions and did not know which one is more accurate and why they are different.

The first Method:

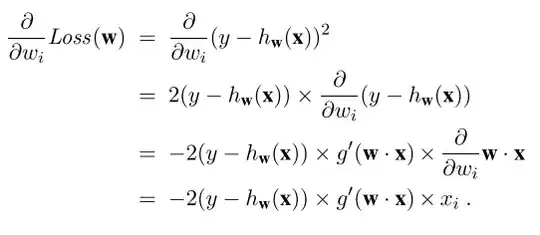

Source: (Book) Artificial Intelligence: A Modern Approach by Norvig, Russell on page 726-727: using the L2 loss function:

where g stands for the logistic function g' stands for g's derivative w stands for weight hw(x) represents the logistic regression hypothesis

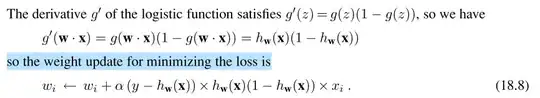

The other method:

Source (Paper authored by Charles Elkan): Logistic Regression and Stochastic Gradient Training.

can be found here