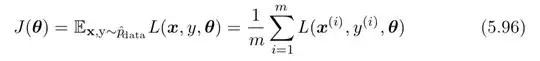

In Chapter 5 of the *Deep Learning* book by Ian Goodfellow et al., some of the notation in the loss functions shown above confuses me.

As I understand it, $x,y \sim p_{data}$ means that the pair $(x, y)$ is sampled from the original data distribution, with $y$ being the ground-truth label. Under that reading, the loss function in formula 5.101 looks correct to me. Formula 5.101 is in fact derived from 5.100 by adding a regularization term.
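
To make my reading of $x,y \sim p_{data}$ concrete, this is how I would expand such an expectation. It is only a sketch of my own understanding: $L$ is my shorthand for a generic per-example loss, and the integral form assumes that $x$ and $y$ are continuous with density $p_{data}$ (for a discrete $y$ the inner integral would become a sum).

$$
\mathbb{E}_{x,y \sim p_{data}} \, L(x, y, \theta) = \int \int p_{data}(x, y) \, L(x, y, \theta) \, dx \, dy
$$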

That is why the notation $x,y \sim \hat{p}_{data}$ in formulas 5.96 and 5.100 confuses me: since 5.101 is built from 5.100, I would expect the same distribution in both, so I cannot tell whether this is a typo or intentional. If it is not a typo, could you help me clarify what the two notations mean? Are they equivalent, and are both correct?