Reference managers like Zotero or Mendeley allow researchers to categorize papers into hierarchical categories called collections. The User navigates through a listing of these collections when filing a new item. The retrieval time grows something like the logarithm of the number of collections; looking for a collection can quickly become a nuisance.

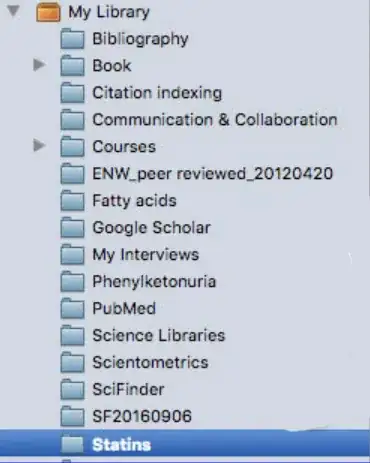

Fig 1. A top level list of collections in the Zotero reference manager

One way to reduce navigation time is to allow users to search collections by name. A complementary solution is to provide a view of the currently "hot" collections. The user may interact with a list of suggested collections, or receive relevant completions when typing into a collections search bar.

This raises a basic learning problem:

Let $K = K_1, \ \dots, \ K_m$ be the sequence of collections the user has visited (possibly augmented with visit times). Let $H_m$ be a set of $n$ collections the user is likely to visit next. How can we construct $H_m$?

A technique that does this this might exploit a few important features of this domain:

- Project Clusters: Users jump between collections relevant to their current projects

- Collection Aging: Users tend to focus on new collections, and forget older ones

- Retrieval Cost: There's a tangible cost to the user (time, distraction) when navigating collections; this applies to the reduced view (the technique might keep $n$ as small as possible)

Two ideas so far

LIFO Cache

Reduce $K_{m-1}, K_{m-2},\ \dots$ into the first $n$ unique entries which do not match $K_m$.

This heuristic is very simple to implement and requires no learning. It encompasses clusters and aging given suitably large $n$. But with large $n$ it incurs a retrieval cost of its own.

Markov Chain/Hidden Markov Model

Use $K$ to train a MC or HMM. Build $H_m$ using estimates from this model.

The simplest version is an order $k\ $ MC transition matrix built using k-gram statistics of $K_i$. This might be sensitive to project clusters, but I don't think it will recognize new collections without a hard coded aging heuristic.

I'm not clear on how HMMs would be trained here, and I'm not very taken with the $k$-gram MC approach. My next task is to read about MCs/HMMs in context of suggestion systems.

Other Models

I am brand new to suggestion systems. Reading leads are quite welcome!

I would be especially excited about unsupervised techniques, and neural network techniques I could train on a GPU. Apart from improving Zotero, I would like this problem to give me an opportunity to learn about cutting edge techniques.

Valuable Goals

An ideal technique would cast light on questions like

- How should we measure the performance of this kind of system? (I suspect cache miss rate is a good metric, as well as the ratio between cache miss rate and cache size)

- How should we translate these human-centric performance metrics into human independent objective functions for learning?

- How much better than LIFO can we theoretically expect to do with a more sophisticated technique (say, in terms of cache size for a given cache miss rate)?

- How can a technique learn patterns like clusters and aging without hand tuned objectives?

I am interested in learning theory and building an implementation, so resources with publicly available code would be be preferable. Apart from potentially being overkill for the problem, I would not mind if the final model depends on a GPU.

Please forgive me for the long and vague question. I wish I had done more reading before posing this question, but I feel a bit stuck! I hope to get unstuck with some good reading resources. Thanks!