In a related question on Computer Science SE, a user wrote:

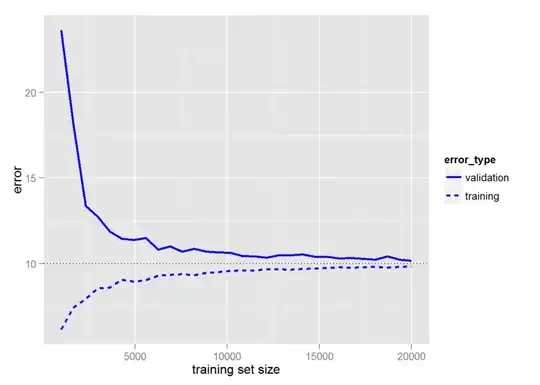

Neural networks typically require a large training set.

Is there a way to define the boundaries of the "optimal" size of a training set in the general case?

When I was learning about fuzzy logic, I heard some rules of thumb that involved examining the mathematical composition of the problem and using that to determine the number of fuzzy sets.

Is there a comparable method that can be applied to an already defined neural network architecture?