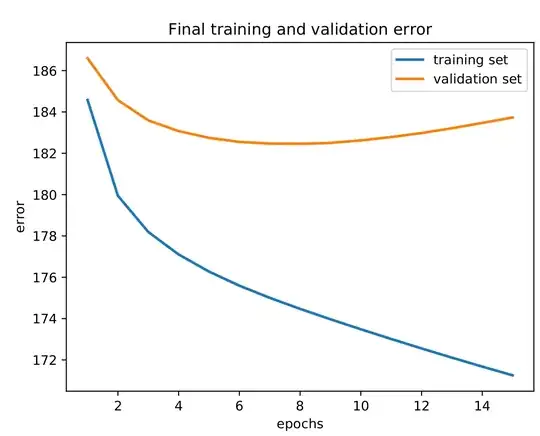

I have been executing an open-source Text-to-speech system Ossian. It uses feed forward DNNs for it's acoustic modeling. The error graph I've got after running the acoustic model looks like this:

Here is some relevant information:

- Size of data: 7 hours of speech (4000 sentences)

- Some hyper-parameters:

  - batch_size: 128

  - training_epochs: 15

  - L2_regularization: 0.003

Can anyone point me in the right direction to improve this model? I assume it is over-fitting. What should I do to avoid this: add more data, or change the batch size, number of epochs, or regularization parameters? Thanks in advance.
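To make the over-fitting suspicion concrete, here is a minimal sketch of how the per-epoch training and validation errors could be compared. The function name `diagnose_overfitting` and the error values are hypothetical placeholders for illustration. They are not numbers from the Ossian run above and not part of Ossian's API.

```python
def diagnose_overfitting(train_err, valid_err):
    """Summarise two per-epoch error curves.

    Returns (best_epoch, final_gap, diverging):
      best_epoch - 1-based epoch with the lowest validation error
      final_gap  - validation minus training error at the last epoch
      diverging  - True if validation error ended above its minimum
    """
    if not train_err or len(train_err) != len(valid_err):
        raise ValueError("need two non-empty curves of equal length")
    # Index of the epoch where validation error is lowest.
    best_idx = min(range(len(valid_err)), key=lambda i: valid_err[i])
    # Gap between the two curves at the end of training.
    final_gap = valid_err[-1] - train_err[-1]
    # Validation error finishing above its minimum hints at over-fitting.
    diverging = valid_err[-1] > valid_err[best_idx]
    return best_idx + 1, final_gap, diverging


# Placeholder values for illustration only (NOT from the actual training log).
train_err = [10.0, 8.0, 7.0, 6.5, 6.0]
valid_err = [11.0, 9.0, 8.5, 8.7, 9.1]

best_epoch, final_gap, diverging = diagnose_overfitting(train_err, valid_err)
print(f"lowest validation error at epoch {best_epoch}")
print(f"final validation-training gap: {final_gap:.2f}")
print(f"validation error rising after its minimum: {diverging}")
```

If the validation error reaches its minimum well before epoch 15 while the training error keeps falling, that would support the over-fitting reading.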