I was watching a video about Convolutional Neural Networks: https://www.youtube.com/watch?v=SQ67NBCLV98. What confuses me is how a filter's channels are applied to the input image, or to the output of a previous layer.

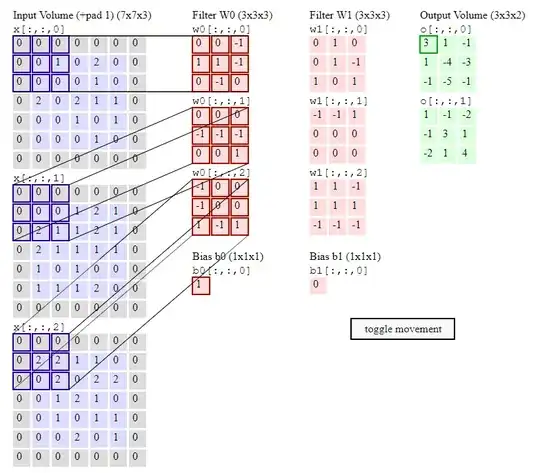

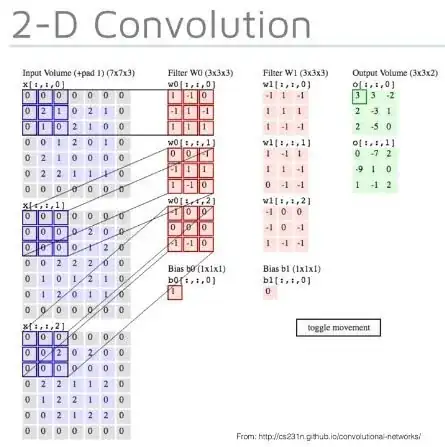

Question 1 - The visual explanation shows how one filter with 3 channels is applied to an input image with 3 channels: each filter channel is applied to its corresponding input channel.

So the output has 3 channels. Makes sense.

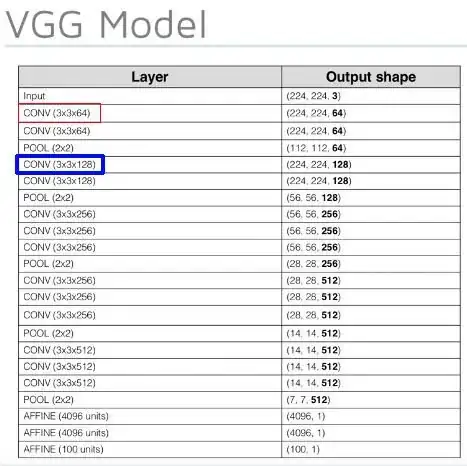

However, the second screenshot shows an example of the VGG network. The first layer (which I've delineated with a red frame) has 64 channels, while the input image has only 3 channels. How does the output shape become 64? The only way I can think of for this to be possible is if you apply:

- filter channel 1 to image channel 1

- filter channel 2 to image channel 2

- filter channel 3 to image channel 3

- filter channel 4 to image channel 1

- filter channel 5 to image channel 2

- filter channel 6 to image channel 3

... and so on.

The other possibility is that these numbers represent Conv layers with 64 filters, rather than a single filter with 64 channels. That's precisely what I'm confused about. In all the popular convolutional networks, when we see these big numbers (64, 128, 256, etc.), are these Conv layers with 64 filters, or are they individual filters with 64 channels each?
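To make the second reading concrete, here is a small sketch of the shape bookkeeping it would imply: a layer with 64 filters, where each filter has as many channels as its input. The helper name `conv_layer_shapes` is hypothetical, and the 224x224 input size, stride 1, and same-padding are my assumptions based on the usual VGG setup, not something I can confirm from the screenshot.

```python
def conv_layer_shapes(in_channels, num_filters, kernel_size, height, width):
    """Return (weight_shape, output_shape) for a stride-1, same-padded conv layer.

    Hypothetical helper for illustration; it only does shape bookkeeping.
    """
    # Under this reading, each filter spans ALL input channels,
    # so a single filter has shape in_channels x k x k.
    weight_shape = (num_filters, in_channels, kernel_size, kernel_size)
    # Each filter produces one 2D feature map, so the number of
    # output channels equals the number of filters.
    output_shape = (num_filters, height, width)
    return weight_shape, output_shape


# Assumed first VGG layer: 3-channel input, 64 filters of size 3x3.
weights, output = conv_layer_shapes(3, 64, 3, 224, 224)
print(weights)  # (64, 3, 3, 3)
print(output)   # (64, 224, 224)
```

If this reading is correct, the "64" would count filters, and each of those filters would have only 3 channels.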

Question 2 - Referring back to the second screenshot, consider the layer I've delineated with a blue frame (3x3x128). This Conv layer, as I understand it, takes the output of 64 max-pooled nodes and applies 128 Conv filters. But how does the output become 128? If we apply each filter to each max-pooled output node, that's 64 x 128 = 8192 channels or nodes in the output shape. Clearly that's not what's happening, so I'm definitely missing something here. How are 128 filters applied to 64 output nodes in such a way that the output is still 128? What's the arrangement?
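For reference, here is a minimal pure-Python sketch of the arrangement I believe standard convolution layers use, where each filter spans every input channel and the per-channel products are summed into a single feature map. If that is right, the output channel count equals the number of filters rather than inputs x filters. The function name `conv2d_naive` is hypothetical, bias terms are left out, and the tiny sizes (2 input channels, 3 filters) are stand-ins for 64 and 128.

```python
def conv2d_naive(image, filters):
    """Naive convolution, stride 1, no padding (illustrative sketch only).

    image:   nested lists shaped [C_in][H][W]
    filters: nested lists shaped [C_out][C_in][k][k]
    returns: nested lists shaped [C_out][H - k + 1][W - k + 1]
    """
    c_in, h, w = len(image), len(image[0]), len(image[0][0])
    k = len(filters[0][0])
    out = []
    for f in filters:  # one output channel per filter
        fmap = [[0.0] * (w - k + 1) for _ in range(h - k + 1)]
        for y in range(h - k + 1):
            for x in range(w - k + 1):
                total = 0.0
                for c in range(c_in):  # sum over ALL input channels
                    for dy in range(k):
                        for dx in range(k):
                            total += f[c][dy][dx] * image[c][y + dy][x + dx]
                fmap[y][x] = total
        out.append(fmap)
    return out


# 2 input channels of size 4x4, filled with ones.
image = [[[1.0] * 4 for _ in range(4)] for _ in range(2)]
# 3 filters, each of shape 2x3x3, filled with ones.
filters = [[[[1.0] * 3 for _ in range(3)] for _ in range(2)] for _ in range(3)]

result = conv2d_naive(image, filters)
print(len(result), len(result[0]), len(result[0][0]))  # 3 2 2
print(result[0][0][0])  # 18.0 (2 channels x 9 weights, all summed)
```

In this sketch, 3 filters over 2 input channels give 3 output channels, not 2 x 3 = 6. By the same logic, 128 filters over 64 input channels would give 128 output channels, not 8192.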

Many thanks in advance.