While analyzing the data for a given problem set, I came across a few distributions which are not Gaussian in nature. They are not even uniform or Gamma distributions(so that I can write a function, plug the parameters and calculate the "Likelihood probability" and solve it using Bayes classification method). I got a set of a few absurd looking PDFs and I am wondering how should I define them mathematically so that I can plug the parameters and calculate the likelihood probability.

The set of PDFs/Distributions that I got are the following and I am including some solutions that I intend to use. Please comment on their validity:

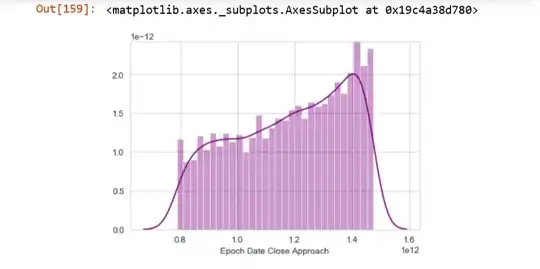

The distribution looks like:

$ y = ax +b $ from $ 0.8<x<1.5 $

How to programmatically calculate

1. The value of x where the pdf starts

2. The value of x where the pdf ends

3. The value of y where the pdf starts

4. The value of y where the pdf ends

However, I would have liked it better to have a generic distribution for this form of graphs so that I can plug the parameters to calculate the probability.

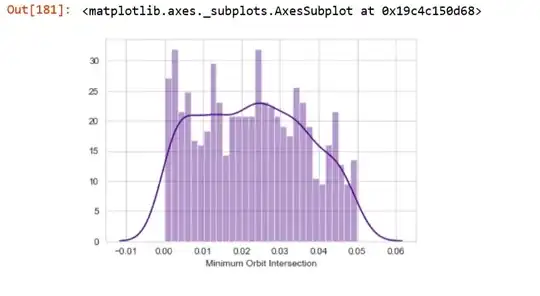

This PDF looks neither uniform nor Gaussian. What kind of distribution should I consider it roughly?

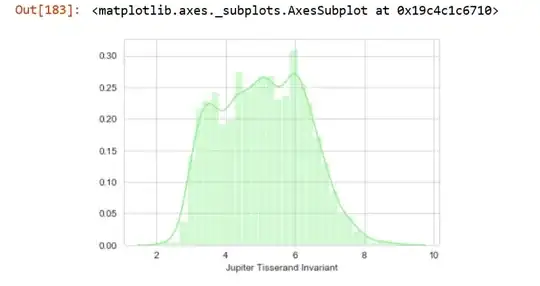

I can divide this graph into three segments. The first segment is from $2<x<3$ with a steep slope, the second segment is from $3<x<6$ with a moderate sope and the third segment is from $6<x<8$ with a high negative slope.

How to programmatically calculate

1. the values of x where the graph changes its slope.

2. the values of y where the graph changes its slope.

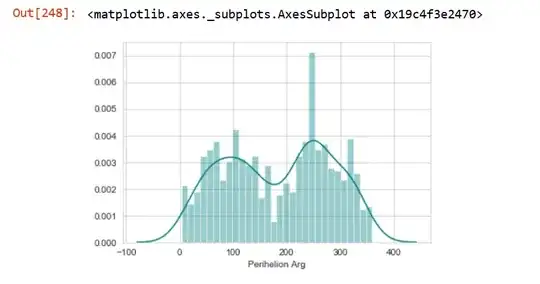

This looks like two Gaussian densities with different mean superimposed together. But then the question arises, how do we find these two individual Gaussian densities?

The following code may help:

variable1=nasa1['PerihelionArg'][nasa1.PerihelionArg>190]

variable2=nasa1['PerihelionArg'][nasa1.PerihelionArg<190]

Find mean and variance of variable1 and variable2, find the corresponding PDFs. Define the overall PDF with a suitable range of $x$.

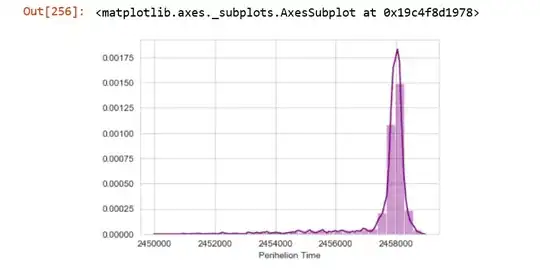

This can be estimated as a Gamma distribution. We can find the mean and variance, calculate $\alpha$ and $\beta$ and finally calculate the PDF.

It would be very helpful if someone could give their insights on the above analysis, its validity, and correctness and their suggestions regarding how problems such as these should be dealt with.