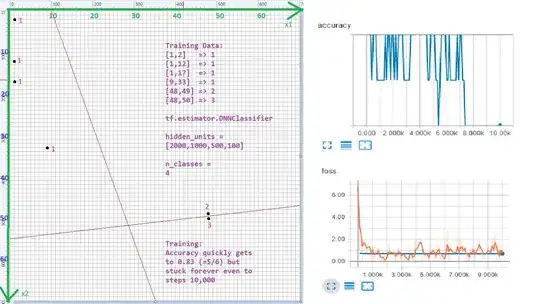

The ready-to-use `DNNClassifier` in `tf.estimator` doesn't seem able to fit this data:

`X = [[1,2], [1,12], [1,17], [9,33], [48,49], [48,50]]`

`Y = [1, 1, 1, 1, 2, 3]`
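For reference, here is the same data as plain Python lists, with a quick check of how close the differently-labelled points get. This is a sketch using only the standard library (`math.dist` needs Python 3.8+). The helper name `closest_opposite_pair` is hypothetical.

```python
import math
from itertools import combinations

X = [[1, 2], [1, 12], [1, 17], [9, 33], [48, 49], [48, 50]]
Y = [1, 1, 1, 1, 2, 3]

def closest_opposite_pair(points, labels):
    """Return (distance, i, j) for the closest pair of points with different labels."""
    best = None
    for i, j in combinations(range(len(points)), 2):
        if labels[i] == labels[j]:
            continue  # only compare points from different classes
        d = math.dist(points[i], points[j])
        if best is None or d < best[0]:
            best = (d, i, j)
    return best

dist, i, j = closest_opposite_pair(X, Y)
print(f"closest pair: {X[i]} (Y={Y[i]}) and {X[j]} (Y={Y[j]}), distance {dist}")
```

The closest pair is (48,49) and (48,50), only 1.0 apart. Each feature spans roughly 48 units.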

I've tried 4 hidden layers, but the model only fits 83% of the samples (5 of 6):

`hidden_units = [2000,1000,500,100]`

`n_classes = 4`
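The 83% figure is simply 5 correct predictions out of 6. The sketch below uses made-up predictions for illustration, not output from an actual run. It assumes the network merges the last two samples into one class. It also checks the label range. When no `label_vocabulary` is given, `DNNClassifier` expects integer labels in `[0, n_classes)`, so labels 1 to 3 require `n_classes = 4`, and class 0 simply never occurs.

```python
Y = [1, 1, 1, 1, 2, 3]
n_classes = 4

# Hypothetical predictions: the Y=2 and Y=3 samples are both predicted as 2.
predicted = [1, 1, 1, 1, 2, 2]

correct = sum(p == y for p, y in zip(predicted, Y))
accuracy = correct / len(Y)
print(f"{correct}/{len(Y)} = {accuracy:.0%}")  # 5/6 = 83%

# Labels must fall in [0, n_classes) when no label vocabulary is given.
assert all(0 <= y < n_classes for y in Y)
```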

The sample data above should be separable by 2 lines, as shown in the image at the top of the post.
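The following sketch confirms that two straight lines can separate all six samples. It uses a hand-written rule with two hypothetical boundaries. The first is a vertical line at x1 = 30, which splits Y=1 from the rest. The second is a horizontal line at x2 = 49.5, which splits Y=2 from Y=3. Both thresholds are illustrative assumptions and are not read off the image.

```python
X = [[1, 2], [1, 12], [1, 17], [9, 33], [48, 49], [48, 50]]
Y = [1, 1, 1, 1, 2, 3]

# Hypothetical boundaries, chosen by hand for illustration.
X1_SPLIT = 30.0   # vertical line: x1 < 30 -> class 1
X2_SPLIT = 49.5   # horizontal line between (48, 49) and (48, 50)

def two_line_rule(x1, x2):
    """Classify a point using the two hand-picked lines."""
    if x1 < X1_SPLIT:
        return 1
    return 2 if x2 < X2_SPLIT else 3

predictions = [two_line_rule(x1, x2) for x1, x2 in X]
accuracy = sum(p == y for p, y in zip(predictions, Y)) / len(Y)
print(predictions, accuracy)  # [1, 1, 1, 1, 2, 3] 1.0
```

A 100% fit therefore exists. The second line just has to fall inside the 1-unit gap between x2 = 49 and x2 = 50.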

It seems to get stuck because the Y=2 and Y=3 samples are too close together. How can I change the DNNClassifier so that it fits the data 100%?