Say I have $x, y$ data related by a function $f$ with some additional parameters $(a, b, c)$:

$$ y = f(x ; a, b, c) $$

Given a set of data points ($x$ and $y$), I want to determine $a$, $b$, $c$. If I know the model $f$, this is a simple curve-fitting problem. But what if I don't have $f$, and instead have lots of example $y$ curves with their corresponding $a, b, c$ values? (Alternatively, $f$ may be expensive to compute, and I want a better way of guessing the right parameters than a brute-force curve fit.) Would simple machine-learning techniques (e.g. from sklearn) work on this problem, or would it require something more like deep learning?
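For reference, this is the "known $f$" baseline I mean: a minimal sketch using `scipy.optimize.curve_fit`, assuming a Gaussian model. The true parameter values, noise level, and initial guess `p0` are made up for illustration.

```python
import numpy as np
from scipy.optimize import curve_fit

def gaussian(x, a, b, c):
    # Assumed model: amplitude a, centre b, width c
    return a * np.exp(-(x - b) ** 2 / c ** 2)

rng = np.random.default_rng(0)
x = np.linspace(0, 1, 100)
true_params = (0.8, 0.4, 0.2)  # illustrative values only
y = gaussian(x, *true_params) + rng.normal(scale=0.02, size=x.size)

# p0 is a rough starting guess; the fit can fail or land in a
# poor local minimum if it is far from the true parameters
popt, pcov = curve_fit(gaussian, x, y, p0=(1.0, 0.5, 0.1))

# c enters the model only as c**2, so its sign is not determined
a_fit, b_fit, c_fit = popt[0], popt[1], abs(popt[2])
print(a_fit, b_fit, c_fit)
```

This works when $f$ is available and cheap to evaluate, which is exactly the assumption I want to drop.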

Here's an example generating the kind of data I'm talking about:

import numpy as np

import matplotlib.pyplot as plt

Nr = 2000

Nx = 100

x = np.linspace(0,1,Nx)

f1 = lambda x, a, b, c : a*np.exp( -(x-b)**2/c**2) # An example function

f2 = lambda x, a, b, c : a*np.sin( x*b + c) # Another example function

prange1 = np.array([[0,1],[0,1],[0,.5]])

prange2 = np.array([[0,1],[0,Nx/2.0],[0,np.pi*2]])

#f, prange = f1, prange1

f, prange = f2, prange2

data = np.zeros((Nr,Nx))

parms = np.zeros((Nr,3))

for i in range(Nr) :

a,b,c = np.random.rand(3)*(prange[:,1]-prange[:,0])+prange[:,0]

parms[i] = a,b,c

data[i] = f(x,a,b,c) + (np.random.rand(Nx)-.5)*.2*a

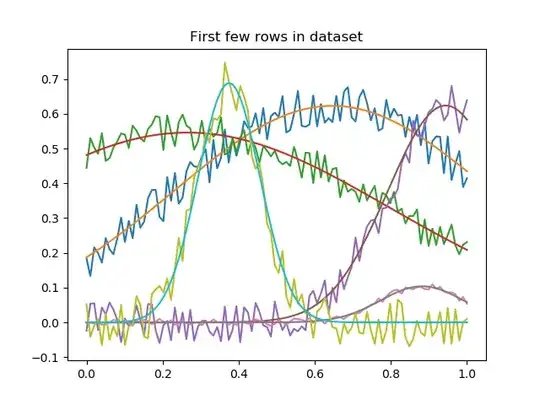

plt.figure(1)

plt.clf()

for i in range(3) :

plt.title('First few rows in dataset')

plt.plot(x,data[i],'.')

plt.plot(x,f(x,*parms[i]))

Given `data`, could you train a model on half of the data set and then determine the a, b, c values for the other half?
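To make the question concrete, here is a minimal sketch of the workflow I have in mind. It assumes the Gaussian example and uses sklearn's `RandomForestRegressor` on the raw curves. The choice of regressor is arbitrary on my part, not something I know to be appropriate. I also raised the lower bound on `c` to 0.05 so that no curve is narrower than the grid spacing.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.metrics import r2_score
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
Nr, Nx = 2000, 100
x = np.linspace(0, 1, Nx)

def f(x, a, b, c):
    # Gaussian example function
    return a * np.exp(-(x - b) ** 2 / c ** 2)

# Assumption: c is bounded away from 0 (the ranges above start at 0)
prange = np.array([[0, 1], [0, 1], [0.05, 0.5]])
parms = rng.random((Nr, 3)) * (prange[:, 1] - prange[:, 0]) + prange[:, 0]
a, b, c = (p[:, None] for p in parms.T)  # column vectors for broadcasting

# Each row is one noisy curve; noise amplitude scales with a
data = f(x[None, :], a, b, c) + (rng.random((Nr, Nx)) - 0.5) * 0.2 * a

# Train on half of the curves, hold out the other half
X_train, X_test, y_train, y_test = train_test_split(
    data, parms, test_size=0.5, random_state=0
)

# Random forests handle multi-output regression directly,
# so one model predicts (a, b, c) together
model = RandomForestRegressor(n_estimators=100, random_state=0)
model.fit(X_train, y_train)
pred = model.predict(X_test)

# One R^2 score per parameter
scores = r2_score(y_test, pred, multioutput="raw_values")
print(dict(zip("abc", scores)))
```

The per-parameter scores at least show which of a, b, c such a model recovers well. What I don't know is whether feeding raw curves in like this is sensible in general.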

I've been going through some sklearn tutorials, but I'm not sure any of the models I've seen apply well to this type of problem. For the Gaussian example I could extract features related to the parameters (e.g. first and second moments, 5th and 95th percentiles, etc.) and feed those into an ML model, which would give good results. But I want something that works more generally, without assuming anything about $f$ or its parameters.
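The hand-built features I mean for the Gaussian case might look like the sketch below. The helper name `moment_features` and the exact feature set are my own choices for illustration. For a noise-free Gaussian of this form the first moment approximates $b$ and the second central moment approximates $c^2/2$.

```python
import numpy as np

def moment_features(x, data):
    """Per-curve features: weighted mean, weighted variance, 5th/95th percentiles."""
    data = np.atleast_2d(data)
    # Treat each curve as weights over x; clip negative noise excursions
    w = np.clip(data, 0, None)
    total = w.sum(axis=1)
    total = np.where(total > 0, total, 1.0)  # guard against all-zero rows
    mean = (w * x).sum(axis=1) / total
    var = (w * (x - mean[:, None]) ** 2).sum(axis=1) / total
    p5, p95 = np.percentile(data, [5, 95], axis=1)
    return np.column_stack([mean, var, p5, p95])

# Noise-free check on a single Gaussian curve (illustrative values)
x = np.linspace(0, 1, 100)
y = 0.7 * np.exp(-(x - 0.5) ** 2 / 0.1 ** 2)
feats = moment_features(x, y)
b_est = feats[0, 0]               # first moment estimates b
c_est = np.sqrt(2 * feats[0, 1])  # variance of this Gaussian is c**2 / 2
print(b_est, c_est)
```

These features only work because I know the curve is a single peak, which is exactly the kind of knowledge about $f$ I'd like to avoid relying on.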