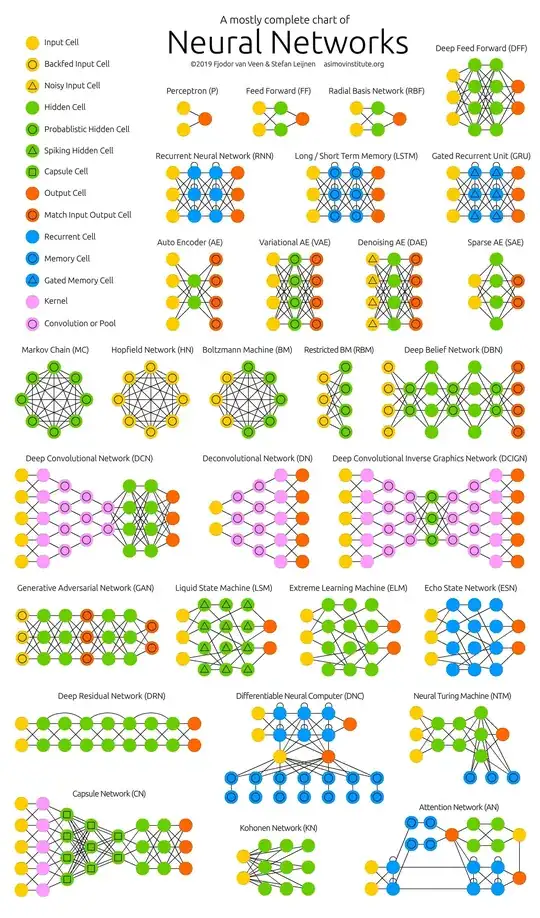

I agree that this is too broad, but here's a one-sentence answer for most of them. The ones I left out (from the bottom of the chart) are very modern and very specialized. I don't know much about them, so perhaps someone who does can improve this answer.

- Perceptron: Linear or logistic-like regression (and thus, classification).

- Feed Forward: Usually non-linear regression or classification with sigmoidal activation. Essentially a multi-layer perceptron.

- Radial Basis Network: Feed Forward network with radial basis activation functions. Used for classification and some kinds of video/audio filtering.

- Deep Feed Forward: Feed Forward with more than one hidden layer. Used to learn more complex patterns in classification or regression, and possibly in reinforcement learning.

- Recurrent Neural Network: A Deep Feed Forward Network where some nodes feed back into themselves or earlier layers, carrying state from one time step to the next. Used in reinforcement learning and to learn patterns in sequential data like text or audio.

- LSTM: A recurrent neural network with specialized control neurons (sometimes called gates) that allow signals to be remembered for longer periods of time, or selectively forgotten. Used in any RNN application, and often able to learn sequences that have a very long repetition time.

- GRU: Much like LSTM, another kind of gated RNN with specialized control neurons.

- Auto Encoder: Learns to compress data and then decompress it. After training, the model can be split into two useful parts: an encoder that maps the input space to a low-dimensional feature space, which may be easier to interpret or understand; and a decoder that maps points in that low-dimensional space back into complex patterns, which can be used to generate such patterns. Basis of much modern work in vision, language, and audio processing.

- VAE, DAE, SAE (variational, denoising, and sparse auto encoders): Specializations of the Auto Encoder.

- Markov Chain: A neural network representation of a Markov chain: state is encoded in the set of neurons that are active, and transition probabilities are thus defined by the weights. Used for learning transition probabilities and for unsupervised feature learning for other applications.

- HN, BM, RBM, DBM: Specialized architectures based on the Markov Chain idea, used to automatically learn useful features for other applications.

- Deep Convolutional Network: Like a feed-forward network, but each node is really a bank of nodes learning a convolution from the layer before it. This essentially allows it to learn filters, edge detectors, and other patterns of interest in image, video, and audio processing.

- Deep Deconvolutional Network: The opposite of a Convolutional Network, in some sense. Learns a mapping from features that represent edges or other high-level properties of some unseen image back to the pixel space, so it can generate images from such summaries.

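- DCIGN (Deep Convolutional Inverse Graphics Network): Essentially an auto-encoder made of a Deep Convolutional Network (DCN) and a Deconvolutional Network (DN) stuck together. Used to learn generative models for complex images like faces.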

- Generative Adversarial Network: Used to learn generative models for complex images (or other data types) when not enough training data is available for a DCIGN. One model learns to generate data from random noise, and the other learns to classify the output of the first network as distinct from whatever training data is available.
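To make the Perceptron entry concrete, here is a minimal sketch of the classic perceptron learning rule in plain Python: a weighted sum passed through a step function, with weights nudged whenever a sample is misclassified. This shows only the linear-classification case; the function names `predict` and `train_perceptron` are hypothetical, chosen for illustration.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    s = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if s > 0 else 0


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron rule: w += lr * (target - prediction) * x, and likewise for the bias."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Logical AND is linearly separable, so a single perceptron can learn it.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]
w, b = train_perceptron(X, y)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

With zero initial weights, the learning rate only rescales `w` and `b`, so it does not change which points get misclassified. Replacing the step with a sigmoid and training on a log loss gives the logistic-regression flavor the entry mentions.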
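The LSTM entry describes gates that let a signal be remembered for a long time or selectively forgotten. The sketch below is a scalar version of one LSTM cell using the common gate equations (forget, input, and output gates plus a tanh candidate value). All weights are hypothetical, hand-picked values rather than trained ones. They are chosen only to show the forget gate either holding or discarding a stored value, and the names `lstm_step` and `run` are likewise illustrative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h, c, p):
    """One step of a scalar LSTM cell; p holds (hypothetical) weights w*, u*, b*."""
    f = sigmoid(p["wf"] * x + p["uf"] * h + p["bf"])    # forget gate: keep old memory?
    i = sigmoid(p["wi"] * x + p["ui"] * h + p["bi"])    # input gate: write new memory?
    o = sigmoid(p["wo"] * x + p["uo"] * h + p["bo"])    # output gate: expose memory?
    g = math.tanh(p["wg"] * x + p["ug"] * h + p["bg"])  # candidate value to write
    c = f * c + i * g       # blend old cell state with the new candidate
    h = o * math.tanh(c)    # hidden output seen by the rest of the network
    return h, c


def run(inputs, forget_bias):
    """Feed a sequence through the cell and return the final cell state."""
    # Hand-picked, hypothetical weights: the input gate opens only when x is large.
    p = {"wf": 0.0, "uf": 0.0, "bf": forget_bias,
         "wi": 10.0, "ui": 0.0, "bi": -5.0,
         "wo": 0.0, "uo": 0.0, "bo": 5.0,
         "wg": 2.0, "ug": 0.0, "bg": 0.0}
    h, c = 0.0, 0.0
    for x in inputs:
        h, c = lstm_step(x, h, c, p)
    return c


# Write a value once, then feed 20 steps of silence.
signal = [1.0] + [0.0] * 20
print(run(signal, forget_bias=10.0))   # stays close to the stored value: remembered
print(run(signal, forget_bias=-10.0))  # collapses towards 0: forgotten
```

In a real LSTM these weights are learned, and the state is a vector rather than a scalar, but the gating arithmetic is the same.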
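To make the Markov Chain entry concrete, suppose exactly one neuron is active (a one-hot vector) and the weight from neuron `i` to neuron `j` holds P(next = j | current = i). Propagating the active vector through the weights then yields the next-state distribution. The sketch below assumes the simplest way of learning those weights, which is counting observed transitions. This is not necessarily how the architecture in the chart is trained, and the function names are hypothetical.

```python
def estimate_transitions(sequence, states):
    """Estimate W[i][j] = P(next = states[j] | current = states[i]) by counting."""
    index = {s: k for k, s in enumerate(states)}
    counts = [[0] * len(states) for _ in states]
    for current, nxt in zip(sequence, sequence[1:]):
        counts[index[current]][index[nxt]] += 1
    weights = []
    for row in counts:
        total = sum(row)
        # Normalize each row so outgoing weights form a probability distribution.
        weights.append([n / total if total else 0.0 for n in row])
    return weights


def next_state_distribution(weights, one_hot):
    """Propagate the 'active neuron' vector through the weight matrix."""
    n = len(weights[0])
    return [sum(one_hot[i] * weights[i][j] for i in range(len(one_hot)))
            for j in range(n)]


states = ["A", "B"]
W = estimate_transitions(list("AAABA"), states)
print(next_state_distribution(W, [0, 1]))  # from B: [1.0, 0.0]
print(next_state_distribution(W, [1, 0]))  # from A: roughly [2/3, 1/3]
```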
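For the Deep Convolutional Network entry, the sketch below shows what "learning a convolution that acts as an edge detector" means in one dimension. The `[-1, 1]` kernel here is hand-set rather than learned, and `conv1d` is a hypothetical helper. Like most deep learning libraries, it computes a cross-correlation (no kernel flip) under the name convolution.

```python
def conv1d(signal, kernel):
    """'Valid' 1D cross-correlation: slide the kernel, take a dot product at each offset."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


# A [-1, 1] kernel responds only where the signal steps up or down,
# so it behaves like a 1D edge detector. A trained CNN would learn such kernels.
row = [0, 0, 0, 5, 5, 5, 0, 0]
print(conv1d(row, [-1, 1]))  # [0, 0, 5, 0, 0, -5, 0]
```

A convolutional layer applies a bank of such kernels, each producing its own feature map, and stacking layers lets later kernels respond to combinations of the edges found earlier.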