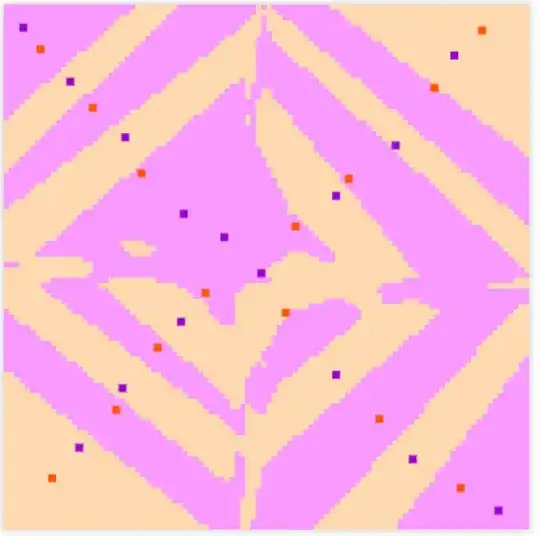

The X-shaped alternating pattern in the image can be separated quite well, and very quickly, by the K-nearest neighbours algorithm (go to https://ml-playground.com to test it).
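To make the setup concrete, here is a minimal pure-Python sketch of K-nearest neighbours on a pattern of this kind. The generator `make_x_pattern` is hypothetical. It assumes the X shape means points on the two diagonals y = x and y = -x, with the class alternating in stripes along each arm, which may not match the playground data exactly.

```python
import math
import random
from collections import Counter

def make_x_pattern(n_per_arm=200, stripes=8, seed=0):
    """Hypothetical X-shaped data: random points on y = x and y = -x,
    with the class alternating from stripe to stripe along each arm."""
    rng = random.Random(seed)
    points, labels = [], []
    for arm, slope in enumerate((1.0, -1.0)):
        for _ in range(n_per_arm):
            t = rng.uniform(-1.0, 1.0)
            # Index of the stripe that t falls into, clamped to the last stripe.
            stripe = min(int((t + 1.0) / 2.0 * stripes), stripes - 1)
            points.append((t, slope * t))
            labels.append((stripe + arm) % 2)
    return points, labels

def knn_predict(points, labels, query, k=3, exclude=None):
    """Majority vote among the k training points closest to `query`.
    `exclude` optionally skips one training index (for leave-one-out)."""
    candidates = [i for i in range(len(points)) if i != exclude]
    nearest = sorted(candidates, key=lambda i: math.dist(points[i], query))[:k]
    return Counter(labels[i] for i in nearest).most_common(1)[0][0]

points, labels = make_x_pattern()

# Leave-one-out accuracy: classify each point using all the other points.
correct = sum(
    knn_predict(points, labels, points[i], k=3, exclude=i) == labels[i]
    for i in range(len(points))
)
accuracy = correct / len(points)
print(f"leave-one-out accuracy: {accuracy:.2f}")
```

The mistakes that remain should sit mostly at the stripe boundaries and near the centre of the X, where points of both classes are close together.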

However, a DNN seems to struggle to separate this X-shaped alternating data. Is it possible to run K-nearest neighbours before the DNN, i.e. to set the DNN weights somehow so that they reproduce the K-nearest neighbours result before the DNN training starts?
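One way to read "simulate the result of K-nearest" is as a pre-training step. Instead of writing the weights directly, label a dense grid with K-nearest neighbours and let the network fit those labels first. The sketch below only builds such a pre-training set. The helper names and the eight-point toy data are hypothetical, and the sketch does not show whether a network pre-trained this way actually does better on the X pattern.

```python
import math

def knn_soft_label(points, labels, query, k=3):
    """Fraction of the k nearest training points that belong to class 1."""
    nearest = sorted(range(len(points)), key=lambda i: math.dist(points[i], query))[:k]
    return sum(labels[i] for i in nearest) / k

def knn_teacher_grid(points, labels, k=3, steps=25, lo=-1.0, hi=1.0):
    """Label a regular steps x steps grid over [lo, hi]^2 with KNN soft labels.

    The (input, target) pairs are meant as a pre-training set for a network,
    to be followed by ordinary training on the real labels."""
    grid = []
    for r in range(steps):
        for c in range(steps):
            q = (lo + (hi - lo) * c / (steps - 1), lo + (hi - lo) * r / (steps - 1))
            grid.append((q, knn_soft_label(points, labels, q, k)))
    return grid

# Toy stand-in for the X pattern (hypothetical): one class per diagonal.
points = [(-1.0, -1.0), (-0.5, -0.5), (0.5, 0.5), (1.0, 1.0),
          (-1.0, 1.0), (-0.5, 0.5), (0.5, -0.5), (1.0, -1.0)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

pretrain_set = knn_teacher_grid(points, labels, k=1, steps=5)
print(len(pretrain_set), "pre-training examples")
```

Each entry of `pretrain_set` is a `((x, y), target)` pair, so it can be fed to any training loop that accepts 2D inputs and a target in [0, 1].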

Another place to test the X-shaped alternating data: https://cs.stanford.edu/people/karpathy/convnetjs/demo/classify2d.html