I have just started playing with reinforcement learning and, starting from the basics, I'm trying to figure out how to solve the Banana gym environment with Coach.

Essentially, the Banana-v0 env represents a banana shop that buys a banana for \$1 on day 1 and has 3 days to sell it at any price between \$0 and \$2, where a lower price means a higher chance of selling. The reward is the sell price less the buy price. If the banana hasn't sold by the end of day 3, it is discarded and the reward is -1 (the buy price, with no sale proceeds). That's pretty simple.
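To make the episode dynamics concrete, here is a minimal Python sketch of one episode as described above. It is not the Banana-v0 source: the sale-probability function `sell_probability` is a hypothetical assumption (a linear drop from a certain sale at \$0 to no sale at \$2), since I don't know the formula the environment actually uses.

```python
import random

BUY_PRICE = 1.0  # the shop pays $1 for the banana on day 1
MAX_DAYS = 3     # the banana must sell within 3 days


def sell_probability(price: float) -> float:
    """HYPOTHETICAL sale model: certain sale at $0, no sale at $2, linear in between.

    The real Banana-v0 formula may differ.
    """
    return max(0.0, min(1.0, 1.0 - price / 2.0))


def run_episode(prices, rng: random.Random) -> float:
    """Simulate one episode for a fixed list of daily prices (one per day).

    Returns sale price minus buy price if the banana sells on some day,
    or -BUY_PRICE if it is still unsold after the last day.
    """
    for day in range(MAX_DAYS):
        price = prices[day]
        if rng.random() < sell_probability(price):
            return price - BUY_PRICE
    return -BUY_PRICE  # discarded: bought for $1, never sold
```

For example, `run_episode([1.5, 1.2, 0.9], random.Random(0))` plays one episode with a falling price schedule; repeated calls with different seeds give different rewards for the same prices, which is the randomness the question below is about.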

Ideally, the algorithm should learn to set a high price on day 1 and reduce it each day if the banana hasn't sold.
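If the sale probabilities were known, the ideal price schedule could be computed directly by backward induction: solve day 3 first, then use its expected value as the "unsold" outcome for day 2, and so on. The sketch below does this over a \$0.01 price grid. It assumes the same hypothetical linear sale model (`sell_probability`), which is not taken from Banana-v0, so only the shape of the result is meaningful, not the exact numbers.

```python
BUY_PRICE = 1.0
MAX_DAYS = 3
PRICE_GRID = [i / 100 for i in range(201)]  # candidate prices $0.00 .. $2.00


def sell_probability(price: float) -> float:
    # HYPOTHETICAL linear sale model; the real Banana-v0 formula may differ
    return max(0.0, min(1.0, 1.0 - price / 2.0))


def optimal_prices():
    """Backward induction over days 3, 2, 1.

    Returns (best price for each day, expected return at the start of day 1).
    """
    continuation = -BUY_PRICE  # value if still unsold after the last day
    prices = [0.0] * MAX_DAYS
    for day in reversed(range(MAX_DAYS)):
        best_price, best_value = None, float("-inf")
        for p in PRICE_GRID:
            q = sell_probability(p)
            # sell now with probability q, otherwise carry on to the next day
            value = q * (p - BUY_PRICE) + (1 - q) * continuation
            if value > best_value:
                best_price, best_value = p, value
        prices[day] = best_price
        continuation = best_value  # value of entering this day unsold
    return prices, continuation


if __name__ == "__main__":
    print(optimal_prices())
```

Under this assumed model the best prices come out at \$1.39, \$1.25 and \$1.00 for days 1 to 3, i.e. a high opening price that drops each day, matching the intuition above (the expected return is slightly negative, about -0.03, for this particular model). The shortcut only works because the sketch knows the sale model; an RL agent has to discover the same schedule from sampled rewards.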

To start, I took the `CartPole_ClippedPPO.py` and `CartPole_DQN.py` preset files bundled with Coach and modified them to run the Banana-v0 gym.

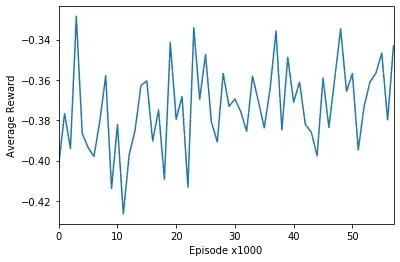

The trouble is that I don't see any learning progress regardless of what I try, even after running about 50,000 episodes. By comparison, the CartPole gym trains successfully in under 500 episodes.

I would have expected some improvement after 50k episodes on a task as simple as Banana.

Is it because the Banana-v0 rewards are not predictable? I.e., whether the banana sells is still determined by a random number (with the chance of success depending on the price).
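Random outcomes on their own don't make the task unlearnable: each pricing policy still has a well-defined expected return, it just takes more episodes to estimate. The sketch below estimates the expected return of two fixed price schedules by Monte Carlo averaging and reports the standard error, which shrinks roughly as 1/√n. It again assumes a hypothetical linear sale model (`sell_probability`), not the real Banana-v0 one, and the second schedule is simply an illustrative "decreasing" policy.

```python
import random
import statistics

BUY_PRICE = 1.0


def sell_probability(price: float) -> float:
    # HYPOTHETICAL linear sale model; the real Banana-v0 formula may differ
    return max(0.0, min(1.0, 1.0 - price / 2.0))


def episode_return(prices, rng: random.Random) -> float:
    """Reward of one episode for a fixed list of daily prices."""
    for price in prices:
        if rng.random() < sell_probability(price):
            return price - BUY_PRICE
    return -BUY_PRICE  # unsold after the last day


def estimate(prices, n_episodes: int, seed: int = 0):
    """Monte Carlo estimate of the expected return, with its standard error."""
    rng = random.Random(seed)
    returns = [episode_return(prices, rng) for _ in range(n_episodes)]
    mean = statistics.mean(returns)
    stderr = statistics.stdev(returns) / n_episodes ** 0.5
    return mean, stderr


if __name__ == "__main__":
    for n in (100, 1_000, 10_000):
        for prices in ([1.0, 1.0, 1.0], [1.39, 1.25, 1.0]):
            mean, se = estimate(prices, n)
            print(f"n={n:>6} prices={prices}: mean={mean:+.3f} +/- {se:.3f}")
```

Under this assumed model the two schedules differ by only about 0.09 in expected reward per episode, while a single episode's reward has a standard deviation of roughly 0.3 to 0.5. When the gap between a poor and a good policy is that small relative to the noise, an unsmoothed per-episode reward curve can look flat even if the policy is improving.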

Where should I take it from here? How do I identify which Coach agent algorithm to start with and then tune?