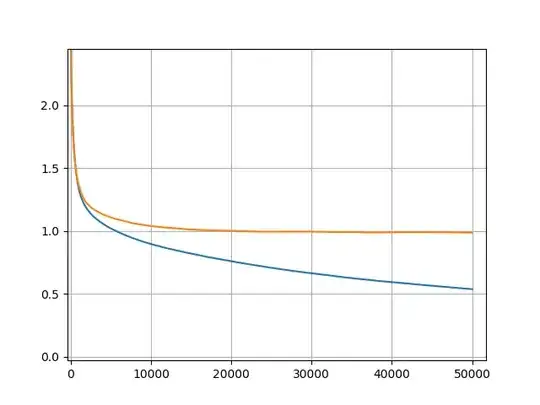

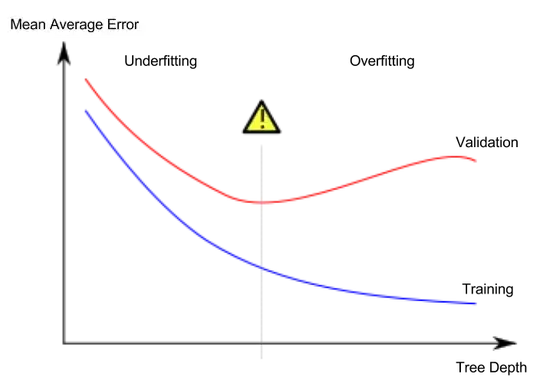

The telltale signature of overfitting is when your validation loss starts increasing, while your training loss continues decreasing, i.e.:

(Image adapted from Wikipedia entry on overfitting)

It is clear that this does not happen in your diagram, hence your model does not overfit.

A difference between a training and a validation score by itself does not signify overfitting. This is just the generalization gap, i.e. the expected gap in the performance between the training and validation sets; quoting from a recent blog post by Google AI:

An important concept for understanding generalization is the generalization gap, i.e., the difference between a model’s performance on training data and its performance on unseen data drawn from the same distribution.

An MSE of 1.0 (or any other specific value, for that matter) by itself cannot be considered "good" or "bad"; everything depends on the context, i.e. of the particular problem and the actual magnitude of your dependent variable: if you are trying to predict something that is in the order of some thousands (or even hundreds), an MSE of 1.0 does not sound bad; it's not the same if your dependent variable takes values, say, in [0, 1] or similar.