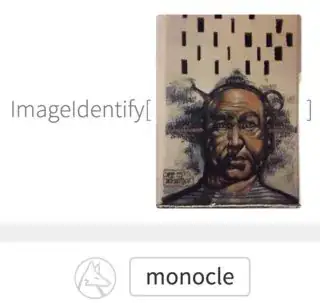

I uploaded a picture of graffiti on a wall to Wolfram's ImageIdentify, but it recognized it as 'monocle'. The secondary guesses were 'primate', 'hominid', and 'person', so nothing even close to 'graffiti' or 'painting'.

Is this by design, or are there methods to teach a convolutional neural network (CNN) to reason about and be aware of the bigger-picture context (such as the graffiti mentioned above)? Currently it seems to detect literally what is depicted in the image, not what the image actually is.

This could be the same problem mentioned here, namely that DNNs are:

> Learning to detect jaguars by matching the unique spots on their fur while ignoring the fact that they have four legs. (2015)

If it's by design, is there an improved CNN variant that performs better at this?