I am practicing fine-tuning ResNet50 for a binary classification task. Here is my code snippet:

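```python
# Imports assume TensorFlow 2.x with the tf.keras (Keras 2) API.
from tensorflow import keras
from tensorflow.keras.applications import ResNet50
from tensorflow.keras.layers import Dropout
from tensorflow.keras.optimizers import SGD
from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Placeholder values: input_size and batch_size are not given in the post; epochs matches the 50-epoch run.
input_size = 224
batch_size = 32
epochs = 50

# ImageNet-pretrained ResNet50 backbone without its classification head
base_model = ResNet50(weights='imagenet', include_top=False)

x = base_model.output
x = keras.layers.GlobalAveragePooling2D(name='avg_pool')(x)
x = Dropout(0.8)(x)  # drops 80% of the pooled features during training
model_prediction = keras.layers.Dense(1, activation='sigmoid', name='predictions')(x)
model = keras.models.Model(inputs=base_model.input, outputs=model_prediction)

# `lr` is deprecated in TF 2.x optimizers; `learning_rate` is the current argument name
opt = SGD(learning_rate=0.01, momentum=0.9, nesterov=False)
model.compile(loss='binary_crossentropy', optimizer=opt, metrics=['accuracy'])

# Training images: rescaled to [0, 1] with shear/zoom augmentation
train_datagen = ImageDataGenerator(rescale=1./255, shear_range=0.2, zoom_range=0.2, horizontal_flip=False)
# Validation images: rescaling only
test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    './project_01/train',
    target_size=(input_size, input_size),
    batch_size=batch_size,
    class_mode='binary')

validation_generator = test_datagen.flow_from_directory(
    './project_01/val',
    target_size=(input_size, input_size),
    batch_size=batch_size,
    class_mode='binary')

# fit_generator is deprecated in TF 2.x; model.fit accepts generators directly
hist = model.fit(
    train_generator,
    steps_per_epoch=1523 // batch_size,  # 759 + 764 (NON) = 1523 training images
    epochs=epochs,
    validation_data=validation_generator,
    validation_steps=269 // batch_size)  # 134 + 135 (NON) = 269 validation images
```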
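The `// batch_size` in `steps_per_epoch` and `validation_steps` rounds down, so when the image count is not a multiple of the batch size, each epoch's steps cover slightly fewer images than the directory holds. The short sketch below works through that arithmetic for the counts in the comments. `batch_size = 32` is an assumption, since the post doesn't state the value, and `steps_and_coverage` is a hypothetical helper.

```python
import math


def steps_and_coverage(num_images: int, batch_size: int) -> tuple:
    """Return (floor_steps, max_images_per_epoch, ceil_steps) for one pass over the data."""
    floor_steps = num_images // batch_size           # what the snippet passes to fit()
    max_images = floor_steps * batch_size            # upper bound on images drawn per epoch
    ceil_steps = math.ceil(num_images / batch_size)  # steps needed to visit every image once
    return floor_steps, max_images, ceil_steps


BATCH_SIZE = 32  # assumption: the post does not give batch_size

for name, count in [("train", 1523), ("val", 269)]:
    steps, max_images, ceil_steps = steps_and_coverage(count, BATCH_SIZE)
    print(f"{name}: {steps} steps cover at most {max_images}/{count} images "
          f"(ceil would give {ceil_steps} steps)")
```

With a batch size of 32, that is 47 training steps (at most 1504 of 1523 images) and 8 validation steps (at most 256 of 269 images); rounding up with `math.ceil` gives 48 and 9.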

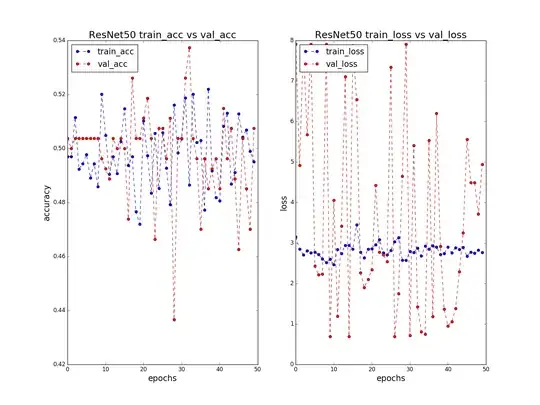

I plotted a figure of the model after training for 50 epochs:

As the plot shows, `train_acc` and `val_acc` fluctuate heavily, and `train_acc` only reaches about 52%, barely above chance for two nearly balanced classes. This suggests the network isn't learning at all, let alone overfitting the data.
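For context on the 52% figure: with 759 vs. 764 training images and 134 vs. 135 validation images, a model that always predicts the larger class already scores just over 50%. The sketch below computes that majority-class baseline and, as a rough gauge of how much validation accuracy can move by chance alone, the binomial standard error of an accuracy measured on 256 images (8 validation steps at an assumed `batch_size` of 32). The helper names are hypothetical.

```python
import math


def majority_baseline(class_counts):
    """Accuracy of a classifier that always predicts the most frequent class."""
    return max(class_counts) / sum(class_counts)


def accuracy_std_error(acc, n):
    """Binomial standard error of an accuracy estimated from n independent samples."""
    return math.sqrt(acc * (1.0 - acc) / n)


train_baseline = majority_baseline([759, 764])  # class counts from the post
val_baseline = majority_baseline([134, 135])
print(f"train majority baseline: {train_baseline:.4f}")
print(f"val majority baseline:   {val_baseline:.4f}")

# Assumption: batch_size = 32, so validation_steps = 269 // 32 = 8 batches = 256 images.
se = accuracy_std_error(0.5, 256)
print(f"std. error of a ~50% accuracy on 256 images: {se:.4f}")
```

Both baselines are about 50.2%, and the standard error is about 0.031, i.e. roughly 3 percentage points, so epoch-to-epoch swings of that size in `val_acc` are consistent with a model that is effectively guessing.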

As for the losses, I can't draw any conclusions from them.
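One reference point for reading the loss curves: for binary cross-entropy, a model that outputs 0.5 for every image has a mean loss of ln 2 ≈ 0.693, and the best constant prediction (the class prior) does only marginally better on nearly balanced data. A training or validation loss hovering around 0.69 is therefore another sign of chance-level behaviour. The sketch below computes both reference values from the training counts in the post; the helper names are hypothetical, and which folder maps to label 1 is an assumption that does not affect either number.

```python
import math


def bce(p_pred, y_true):
    """Binary cross-entropy for one prediction p_pred in (0, 1) and label y_true in {0, 1}."""
    return -(y_true * math.log(p_pred) + (1 - y_true) * math.log(1 - p_pred))


def constant_predictor_loss(p_pred, n_pos, n_neg):
    """Mean BCE over a dataset when every image receives the same prediction p_pred."""
    total = n_pos * bce(p_pred, 1) + n_neg * bce(p_pred, 0)
    return total / (n_pos + n_neg)


# Training counts from the post; assumption: the 759-image class is label 1.
n_pos, n_neg = 759, 764
print(f"loss at p = 0.5:   {constant_predictor_loss(0.5, n_pos, n_neg):.4f}")
prior = n_pos / (n_pos + n_neg)
print(f"loss at p = prior: {constant_predictor_loss(prior, n_pos, n_neg):.4f}")
```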

Before training starts, the two `flow_from_directory` calls print:

```
Found 1523 images belonging to 2 classes.
Found 269 images belonging to 2 classes.
```
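These lines come from `flow_from_directory`, which treats each subdirectory as one class and, unless `classes` is passed explicitly, assigns label indices in sorted order of the subdirectory names (the resulting mapping is available as `train_generator.class_indices`). With `class_mode='binary'` and a sigmoid output, the class sorted second becomes label 1. The stdlib-only sketch below imitates that counting and ordering so both splits can be checked for the same two classes with the same indices. It is a simplified approximation, not Keras's code: it counts only files directly inside each class folder, and the extension set, the folder names `POS`/`NON`, and the `summarize_directory` helper are assumptions.

```python
import os
import tempfile

# Assumption: approximates the image extensions Keras accepts.
IMAGE_EXTS = {".png", ".jpg", ".jpeg", ".bmp", ".ppm", ".tif", ".tiff"}


def summarize_directory(root):
    """Return ({class_name: index}, {class_name: image_count}) for a class-per-subfolder layout."""
    classes = sorted(d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d)))
    class_indices = {name: i for i, name in enumerate(classes)}  # sorted order -> 0, 1, ...
    counts = {}
    for name in classes:
        folder = os.path.join(root, name)
        counts[name] = sum(
            1 for f in os.listdir(folder) if os.path.splitext(f)[1].lower() in IMAGE_EXTS
        )
    return class_indices, counts


# Demo on a tiny temporary tree with hypothetical folder names and counts.
with tempfile.TemporaryDirectory() as root:
    for name, n in [("POS", 3), ("NON", 2)]:
        os.makedirs(os.path.join(root, name))
        for i in range(n):
            open(os.path.join(root, name, f"img_{i}.jpg"), "wb").close()  # empty placeholder files
    indices, counts = summarize_directory(root)
    print(indices)
    print(counts)
```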

Is my fine-tuned model learning anything at all?

I'd appreciate it if someone could guide me toward solving this issue.