The description of feature selection based on a random forest uses trees without pruning. Do I need to use tree pruning? The thing is, if I don't cut the trees, the forest will retrain.

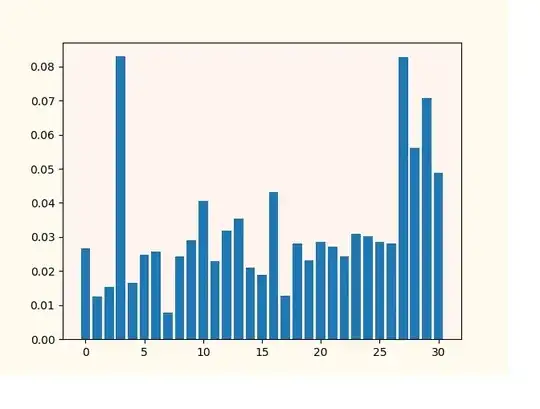

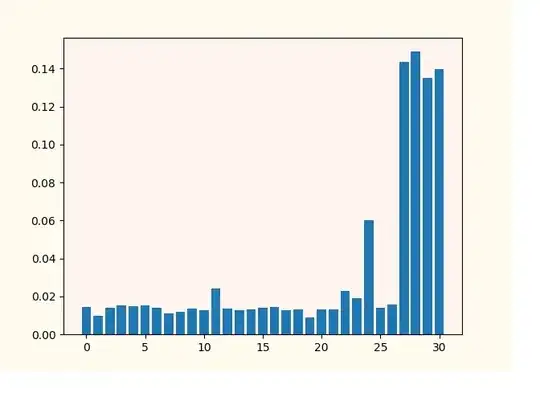

Below in the picture is the importance of features based on 500 trees without pruning.

I always use the last four signs 27, 28, 29, 30. And I try to add to them signs from 0 to 26 by means of cycles, going through possible combinations. Empirically, I assume that the trait number 0, 26 is significant. But, on both pictures it is not visible. Although the quality of classification with the addition of 0, 26 has improved.