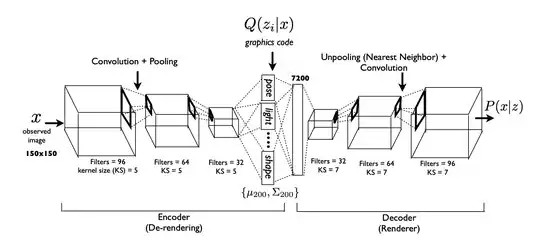

This post refers to Fig. 1 of a paper by Microsoft on their Deep Convolutional Inverse Graphics Network:

https://www.microsoft.com/en-us/research/wp-content/uploads/2016/11/kwkt_nips2015.pdf

Having read the paper, I understand in general terms how the network functions. However, one detail has been bothering me: how does the network decoder (or "Renderer") generate small scale features in the correct location as defined by the graphics code? For example, when training the dataset on faces, one might train a single parameter in the graphics code to control the (x,y) location of a small freckle. Since this feature is small, it will be "rendered" by the last convolutional layer where the associated kernels are small. What I don't understand is how the information of the location of the freckle (in the graphics code) propagates through to the last layer, when there are many larger-scale unpooling + convolutional layers in-between.

Thanks for the help!