Batch size does make a difference, but how much depends heavily on your task. Generally speaking, a smaller batch size gives lower throughput measured in samples per minute but higher throughput measured in batches per minute. If the batch size is too small, samples per minute drops very low and training slows down severely. A batch size that is too small (for example, 1) also makes it harder for the model to generalize and slower to converge.

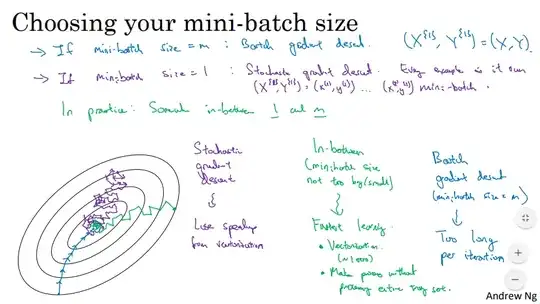

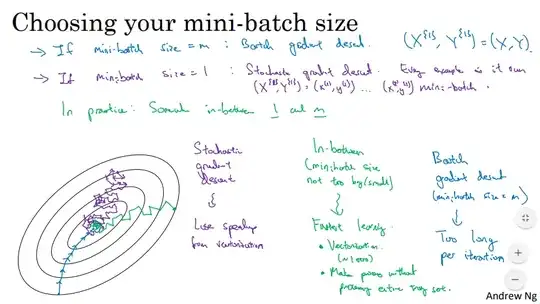

This slide (source) is a great demonstration of how batch size affects training.

As you can see from the diagram, with a small batch size the route to convergence is ragged and indirect. This is because the model may train on an outlier and have its performance drop before it fits again. Of course, this is an edge case, and you would never train a model with a batch size of 1.
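To make the "ragged route" concrete, here is a minimal sketch of how noisy a mini-batch gradient estimate is at different batch sizes. The toy loss, the standard normal data, and the helper name `gradient_noise` are all assumptions made for illustration; this is not the setup behind the slide.

```python
import random
import statistics


def gradient_noise(batch_size, n_batches=2000, seed=0):
    """Std. dev. of mini-batch gradient estimates for a toy 1-D problem.

    Toy setup (an assumption for illustration): the loss for a sample x is
    0.5 * (w - x) ** 2, so the per-sample gradient at w is (w - x). Samples
    are drawn from a standard normal distribution and w is fixed at 0.
    """
    rng = random.Random(seed)
    w = 0.0
    estimates = []
    for _ in range(n_batches):
        batch = [rng.gauss(0.0, 1.0) for _ in range(batch_size)]
        # Mini-batch gradient = mean of the per-sample gradients.
        estimates.append(sum(w - x for x in batch) / batch_size)
    return statistics.pstdev(estimates)


noise = {bs: gradient_noise(bs) for bs in (1, 4, 16, 64)}
for bs, value in noise.items():
    print(f"batch size {bs:>2}: gradient noise ~ {value:.3f}")
```

In this toy setting the noise shrinks roughly with the square root of the batch size, which is why single-sample updates zigzag so much more than updates from larger batches.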

On the other hand, with a batch size that is too large, each iteration takes too long. Once the batch size is at least decent (16 or more), the number of iterations needed to train the model is similar, so a larger batch size is not going to help much, and performance will not vary much either.

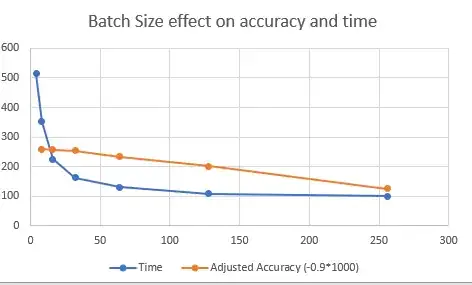

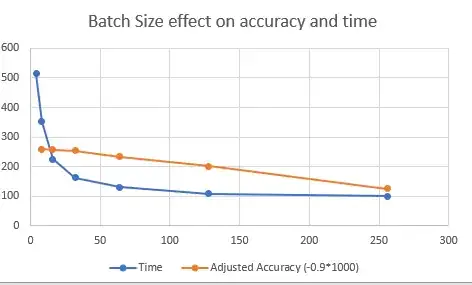

In your case, batch size will affect accuracy, but only minimally. While writing this answer, I ran a few tests on how batch size affects performance and training time; here are the results. (Results for a batch size of 1 are still to be added.)

| Batch size | Time required (s) | Accuracy |
| --- | --- | --- |
| 256 | 98.50849771499634 | 0.9414 |
| 128 | 108.53689193725586 | 0.9668 |
| 64 | 129.92272853851318 | 0.9776 |
| 32 | 162.13709354400635 | 0.9844 |
| 16 | 224.82269191741943 | 0.9854 |
| 8 | 351.2729814052582 | 0.9861 |
| 4 | 514.2667407989502 | 0.9862 |
| 2 | 829.1623721122742 | 0.9869 |

You can test it out yourself in this Google Colab.

As you can see, accuracy increases as the batch size decreases. This is expected: with the number of epochs fixed, doubling the batch size halves the number of iterations, so a larger batch size means the model is trained for fewer iterations. The time required rises steeply as the batch size shrinks, by roughly 1.5x for each halving below 16, while batch sizes of 32 and above differ comparatively little in time (about 99 s to 162 s). Accuracy, in turn, barely changes for batch sizes of 32 or below (0.9844 to 0.9869).
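The trade-off in the timings can be checked with a few lines of arithmetic. The sketch below copies the measured times from the results above; the helper names are hypothetical, and the throughput figures assume every run processed the same number of samples (same dataset, same number of epochs), which is an assumption rather than something the results state.

```python
# Timings in seconds, copied from the results above, keyed by batch size.
timings = {
    256: 98.50849771499634,
    128: 108.53689193725586,
    64: 129.92272853851318,
    32: 162.13709354400635,
    16: 224.82269191741943,
    8: 351.2729814052582,
    4: 514.2667407989502,
    2: 829.1623721122742,
}


def slowdown_per_halving(times):
    """Map each batch size to its time divided by the time at twice that size."""
    return {
        bs: times[bs] / times[2 * bs]
        for bs in sorted(times, reverse=True)
        if 2 * bs in times
    }


def relative_throughput(times, baseline=256):
    """Return {batch_size: (samples/s, batches/s)} relative to the baseline.

    Assumes every run processed the same number of samples, so samples/s is
    proportional to 1 / time and batches/s to 1 / (time * batch_size).
    """
    result = {}
    for bs, t in times.items():
        samples = times[baseline] / t
        batches = samples * baseline / bs
        result[bs] = (samples, batches)
    return result


ratios = slowdown_per_halving(timings)
rel = relative_throughput(timings)
for bs in sorted(timings, reverse=True):
    samples, batches = rel[bs]
    slowdown = f"{ratios[bs]:.2f}x" if bs in ratios else "n/a"
    print(f"bs={bs:>3}  slowdown vs 2x size: {slowdown:>6}  "
          f"samples/s: {samples:.2f}x  batches/s: {batches:.2f}x")
```

Under that assumption, small batch sizes process many more batches per second but far fewer samples per second, which matches the general point about throughput.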

In your case, I would actually recommend sticking with a batch size of 64 even with 4 GPUs. With multiple GPUs, the rule of thumb is to use a batch size of at least 16 (or so) per GPU, because with a batch size of 4 or 8 the GPU cannot be fully utilized to train the model.
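As a quick sanity check of that rule of thumb, here is a small sketch that splits a global batch evenly across GPUs and flags splits that fall below 16 per GPU. The function name and the even split are assumptions; whether your framework treats the configured batch size as global or per device varies, so check its documentation.

```python
MIN_PER_GPU = 16  # rule-of-thumb lower bound from this answer, not a hard limit


def per_gpu_batch_size(global_batch_size, num_gpus):
    """Split a global batch evenly across GPUs (assumes plain data parallelism)."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    if global_batch_size % num_gpus != 0:
        raise ValueError("global batch size must divide evenly across GPUs")
    return global_batch_size // num_gpus


for global_bs in (16, 32, 64, 128):
    share = per_gpu_batch_size(global_bs, num_gpus=4)
    verdict = "ok" if share >= MIN_PER_GPU else "likely under-utilizes each GPU"
    print(f"global batch {global_bs:>3} on 4 GPUs -> {share:>2} per GPU ({verdict})")
```

With 4 GPUs, a global batch size of 64 lands exactly on 16 per GPU, which is why 64 is a reasonable choice here.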

With multiple GPUs, there might be a slight difference due to precision error. Please see here.

Conclusion

Batch size doesn't matter much for performance, as long as you set a reasonable batch size (16+) and keep the number of iterations, not epochs, the same. However, training time will be affected. For multi-GPU training, use the smallest batch size per GPU that fully utilizes the GPU; 16 per GPU is quite good.
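Keeping the iterations the same means adjusting the number of epochs when you change the batch size. A minimal sketch of that arithmetic follows; the dataset size of 60,000 and the helper names are hypothetical, chosen only for illustration.

```python
import math


def steps_per_epoch(dataset_size, batch_size):
    """Number of iterations (weight updates) in one pass over the data."""
    return math.ceil(dataset_size / batch_size)


def epochs_to_match(reference_batch_size, reference_epochs,
                    new_batch_size, dataset_size):
    """Epochs needed at new_batch_size to reach at least as many iterations
    as reference_epochs at reference_batch_size."""
    target_steps = steps_per_epoch(dataset_size, reference_batch_size) * reference_epochs
    return math.ceil(target_steps / steps_per_epoch(dataset_size, new_batch_size))


dataset_size = 60_000  # hypothetical dataset size
for new_bs in (32, 64, 128):
    epochs = epochs_to_match(32, 5, new_bs, dataset_size)
    print(f"batch size {new_bs:>3}: {steps_per_epoch(dataset_size, new_bs)} steps/epoch, "
          f"{epochs} epochs to match 5 epochs at batch size 32")
```

Doubling the batch size roughly doubles the epochs required to see the same number of updates, so comparisons at a fixed epoch count favor smaller batch sizes.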