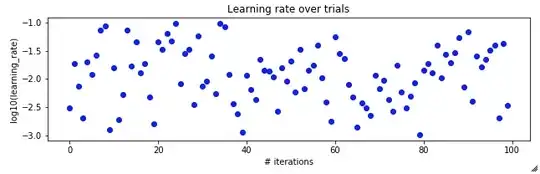

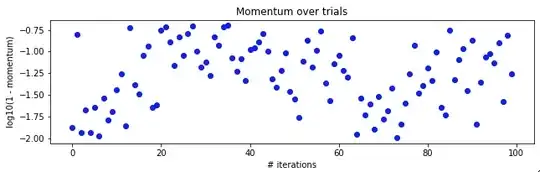

I've been trying out bayesian hyperparameter optimisation (with TPE) on a simple CNN applied to the MNIST handwritten digit dataset. I noticed that over iterations of the optimisation loop, the tested parameters appear to oscillate slowly.

I won't add a graph, but the batch size is also sampled from one of 32, 64, or 128. Also note that I did this with a fixed 10 epochs in each trial.

I understand that we'd expect the trialled parameters to converge gradually towards the optimal, but why the longer term movement of the average?

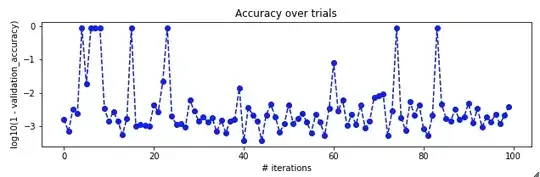

For context here is the score (1 - accuracy) over iterations

And also for context, here's the architecture of the CNN.

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_1 (Conv2D) (None, 26, 26, 32) 320

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 13, 13, 32) 0

_________________________________________________________________

conv2d_2 (Conv2D) (None, 11, 11, 64) 18496

_________________________________________________________________

conv2d_3 (Conv2D) (None, 9, 9, 64) 36928

_________________________________________________________________

max_pooling2d_2 (MaxPooling2 (None, 4, 4, 64) 0

_________________________________________________________________

flatten_1 (Flatten) (None, 1024) 0

_________________________________________________________________

dense_1 (Dense) (None, 100) 102500

_________________________________________________________________

dense_2 (Dense) (None, 10) 1010

=================================================================

Optimization done with mini-batch gradient descent on the cross entropy.