I was wondering if it's possible to get the inverse of a neural network. If we view a NN as a function, can we obtain its inverse?

I tried to build a simple MNIST architecture, with the input of (784,) and output of (10,), train it to reach good accuracy, and then inverse the predicted value to try and get back the input - but the results were nowhere near what I started with. (I used the pseudo-inverse for the W matrix.)

My NN is basically the following function:

$$ f(x) = \theta(xW + b), \;\;\;\;\; \theta(z) = \frac{1}{1+e^{-z}} $$

I.e.

def rev_sigmoid(y):

return np.log(y/(1-y))

def rev_linear(z, W, b):

return (z - b) @ np.linalg.pinv(W)

y = model.predict(x_train[0:1])

z = rev_sigmoid(y)

x = rev_linear(z, W, b)

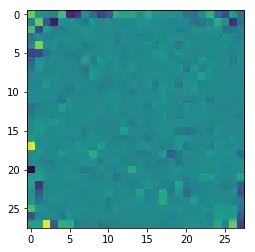

x = x.reshape(28, 28)

plt.imshow(x)

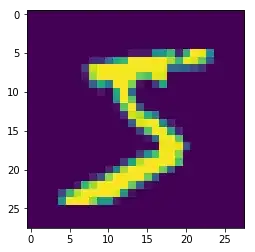

^ This should have been a 5:

Is there a reason why it failed? And is it ever possible to get inverse of NN's?

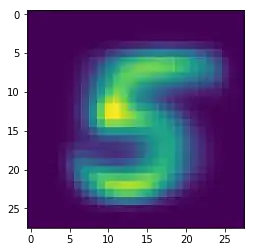

EDIT: it is also worth noting that doing the opposite does yield good results. I.e. starting with the y's (a 1-hot encoding of the digits) and using it to predict the image (an array of 784 bytes) using the same architecture: input (10,) and output (784,) with a sigmoid. This is not exactly equivalent, to an inverse as here you first do the linear transformation and then the non-linear. While in an inverse you would first do (well, undo) the non-linear, and then do (undo) the linear. I.e. the claim that the 784x10 matrix is collapsing too much information seems a bit odd to me, as there does exist a 10x784 matrix that can reproduce enough of that information.