I'm working on a continuous state / continuous action controller. It shall control a certain roll angle of an aircraft by issuing the correct aileron commands (in $[-1, 1]$).

To this end, I use a neural network and the DDPG algorithm, which shows promising results after about 20 minutes of training.

I stripped down the presented state to the model to only the roll angle and the angular velocity, so that the neural network is not overwhelmed by state inputs.

So it's a 2 input / 1 output model to perform the control task.

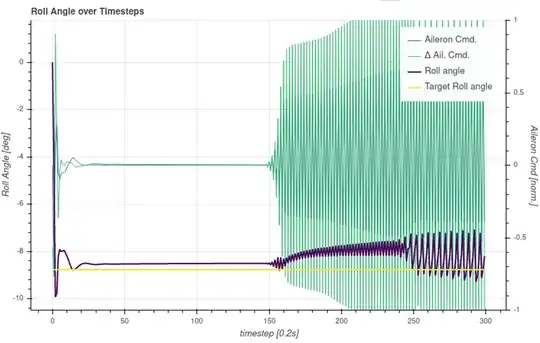

In test runs, it looks mostly good, but sometimes, the controller starts thrashing, i.e. it outputs flittering commands, like in a very fast bangbang-controlm which causes a rapid movement of the elevator.

Even though this behavior kind of maintains the desired target value, this behavior is absolutely undesirable. Instead, it should keep the output smooth. So far, I was not able to detect any special disturbance that starts this behavior. Yet it comes out of the blue.

Does anybody have an idea or a hint (maybe a paper reference) on how to incorporate some element (maybe reward shaping during the training) to avoid such behavior? How to avoid rapid actuator movements in favor of smooth movements?

I tried to include the last action in the presented state and add a punishment component in my reward, but this did not really help. So obviously, I do something wrong.