I'm training a deep network in Keras for binary classification on images (I have around 12K of them). Every so often, I collect some false positives, add them to my training set, and retrain for higher accuracy.

I split my data 20/80 percent into training/validation sets.

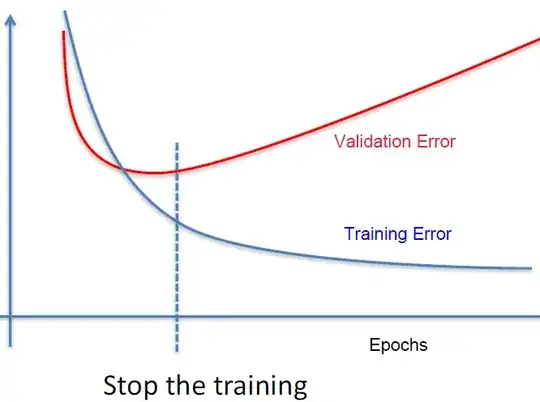

Now, my question is: which resulting model should I use? Always the one with the higher validation accuracy, or maybe the one with the higher mean of training and validation accuracy? Which of the following two would you prefer?

Epoch #38: training acc: 0.924, validation acc: 0.944

Epoch #90: training acc: 0.952, validation acc: 0.932
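
To make the two candidate criteria concrete, here is a minimal sketch in plain Python that applies both to the two epochs above. The dictionary layout and the helper names (`mean_acc`, `best_by_val`, `best_by_mean`) are only illustrative and not part of Keras; the numbers are the ones listed above.

```python
# Accuracies copied from the two epochs listed above.
epochs = {
    38: {"train": 0.924, "val": 0.944},
    90: {"train": 0.952, "val": 0.932},
}


def mean_acc(metrics):
    """Unweighted mean of training and validation accuracy."""
    return (metrics["train"] + metrics["val"]) / 2


def best_by_val(epochs):
    """Epoch with the highest validation accuracy."""
    return max(epochs, key=lambda e: epochs[e]["val"])


def best_by_mean(epochs):
    """Epoch with the highest mean of training and validation accuracy."""
    return max(epochs, key=lambda e: mean_acc(epochs[e]))


print(best_by_val(epochs))   # 38
print(best_by_mean(epochs))  # 90
```

So the two criteria disagree here: validation accuracy alone picks epoch 38, while the mean (about 0.934 vs. 0.942) picks epoch 90.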