I'm new to Speech Synthesis & Deep Learning. Recently, I got a task as described below:

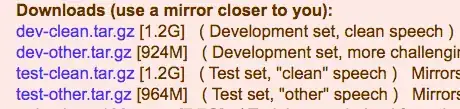

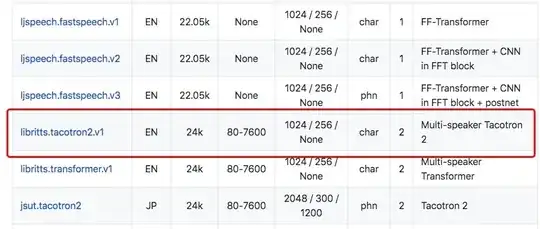

I have problem in training a multi-speaker model which should be created by Tacotron2. And I was told I can get some ideas from espnet, which is a end-to-end audio tools library. In this way, I found a good dataset called libritts: http://www.openslr.org/60/. And it's also found at espnet: https://github.com/espnet/espnet#tts-results

Here is my initial thought:

- Download libritts corpus / Read the espnet code: ../espnet/egs/libritts/tts1/run.sh, learning how to train the libritts corpus by pytorch-backend.

Difficulty: But I cannot get it across that how the author trained a libritts.tacotron2.v1 as I didn't found anything about tacotron2 along those shells related to run.sh. Maybe He didn't make those codes open-source.

- Read the tacotron2 code and tune it into a multi-speaker network:

Difficulty: I found the code is really complex.... I just got lost in reading these codes, without a clear understanding about how to tune this model. Cause Tacotron2 was designed with a LJSpeech Dataset(only 1 person).

- Training the multi-speaker model with tiny set of dataset (http://www.openslr.org/60/) to save time.

they contains about 110 people's data, which can be enough for my scenario.

In the end:

Coud you please help me about my questions. I've been puzzled by this problem for a long time...