Given a ridge and a lasso regularizer, which one should be chosen for better performance?

An intuitive graphical explanation (intersection of the elliptical contours of the loss function with the region of constraints) would be helpful.

Given a ridge and a lasso regularizer, which one should be chosen for better performance?

An intuitive graphical explanation (intersection of the elliptical contours of the loss function with the region of constraints) would be helpful.

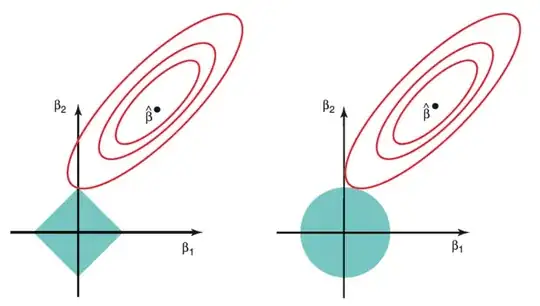

The following graph shows the constraint region (green), along with contours for Residual sum of squares (red ellipse). These are iso-lines signifying that points on an ellipse have the same RSS.

Figure: Lasso (left) and Ridge (right) Constraints [Source: Elements of Statistical Learning]

Figure: Lasso (left) and Ridge (right) Constraints [Source: Elements of Statistical Learning]

As Ridge regression has a circular constraint ($\beta_1^2 + \beta_2^2 <= d$) with no edges, the intersection will not occur on an axis, signifying that the ridge regression parameters will usually be non-zero.

On the contrary, the Lasso constraint ($|\beta_1| + |\beta_2| <= d$) has corners at each of the axes, and so the ellipse will often intersect the constraint region at an axis. In 2D, such a scenario would result in one of the parameters to become zero whereas in higher dimensions, more of the parameter estimates may simultaneously reach zero.

This is a disadvantage of ridge regression, wherein the least important predictors never get eliminated, resulting in the final model to contain all predictor variables. For Lasso, the L1 penalty forces some parameters to be exactly equal to zero when $\lambda$ is large. This has a dimensionality reduction effect resulting in sparse models.

In cases where the number of predictors are small, L2 could be chosen over L1 as it constraints the coefficient norm retaining all predictor variables.