There is this video on pythonprogramming.net that trains a network on the MNIST handwriting dataset. At ~9:15, the author explains that the data should be normalized.

The normalization is done with

x_train = tf.keras.utils.normalize(x_train, axis=1)

x_test = tf.keras.utils.normalize(x_test, axis=1)

The explanation is that values in a range of 0 ... 1 make it easier for a network to learn. That might make sense, if we consider sigmoid functions, which would otherwise map almost all values to 1.

I could also understand that we want black to be pure black, so we want to adjust any offset in black values. Also, we want white to be pure white and potentially stretch the data to reach the upper limit.

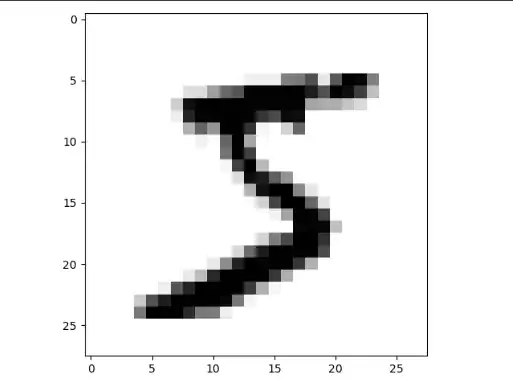

However, I think the kind of normalization applied in this case is incorrect. The image before was:

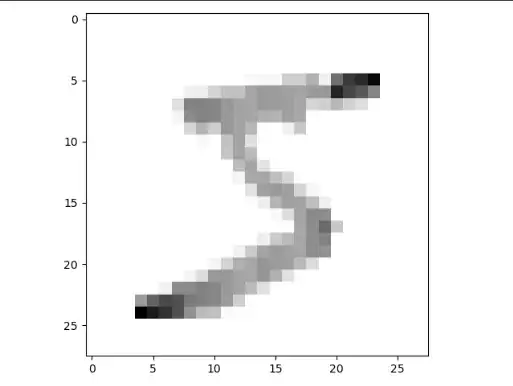

After the normalization it is

As we can see, some pixels which were black before have become grey now. Columns with few black pixels before result in black pixels. Columns with many black pixels before result in lighter grey pixels.

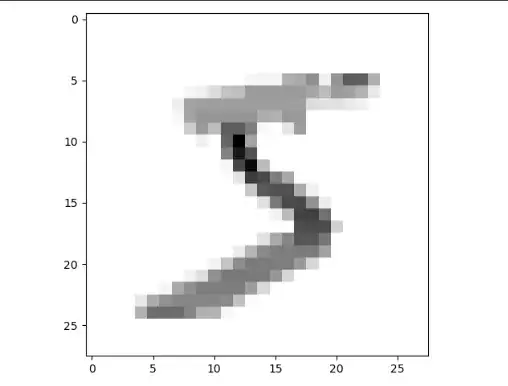

This can be confirmed by applying the normalization on a different axis:

Now, rows with few black pixels before result in black pixels. Rows with many black pixels result in lighter grey pixels.

Is normalization used the right way in this tutorial? If so, why? If not, would my normalization be correct?

What I expected was a per pixel mapping from e.g. [3 ... 253] (RGB values) to [0.0 ... 1.0]. In Python code, I think this should do:

import numpy as np

import imageio

image = imageio.imread("sample.png")

image = (image - np.min(image))/np.ptp(image)