Can vanishing gradients be detected from how the distribution of my convolution kernel weights changes (or fails to change) across training epochs? If so, how?

For example, if only 25% of my kernel's weights ever change over the course of training, does that indicate a vanishing gradient problem?
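To make the "25% of weights change" figure concrete, here is a minimal sketch of how such a fraction could be measured, assuming two NumPy snapshots of the same kernel taken at different epochs. The function name `fraction_changed`, the tolerance `tol`, and the toy kernel shape are hypothetical and chosen only for illustration.

```python
import numpy as np


def fraction_changed(w_before, w_after, tol=1e-8):
    """Return the fraction of entries whose absolute change exceeds tol.

    w_before, w_after: snapshots of the same weight tensor (hypothetical inputs).
    tol: assumed threshold below which a weight counts as "unchanged".
    """
    w_before = np.asarray(w_before, dtype=np.float64)
    w_after = np.asarray(w_after, dtype=np.float64)
    if w_before.shape != w_after.shape:
        raise ValueError("snapshots must have the same shape")
    changed = np.abs(w_after - w_before) > tol
    return float(changed.mean())


# Toy kernel with assumed layout (height, width, in_channels, out_channels).
rng = np.random.default_rng(0)
kernel_epoch_0 = rng.normal(size=(3, 3, 1, 4))
kernel_epoch_n = kernel_epoch_0.copy()
kernel_epoch_n[..., 0] += 0.01  # only the first of four output channels moves

print(fraction_changed(kernel_epoch_0, kernel_epoch_n))  # 0.25
```

Note that this measures weight updates, not gradients directly. The size of an update also depends on the learning rate and the optimizer, so a small fraction here is a hint rather than proof of vanishing gradients.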

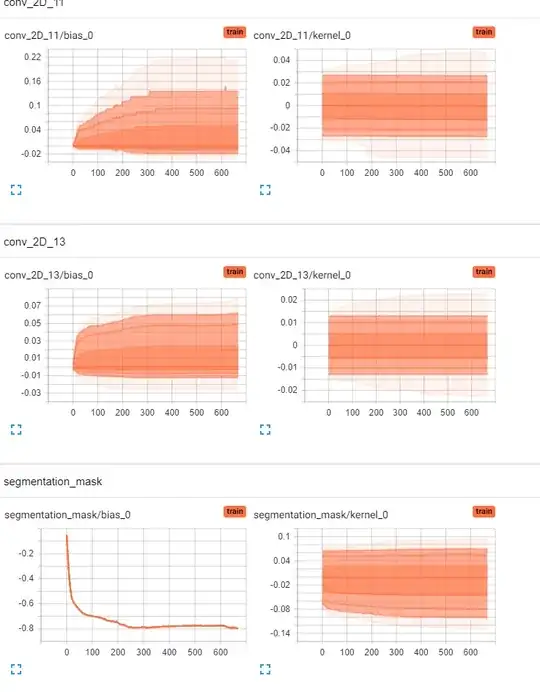

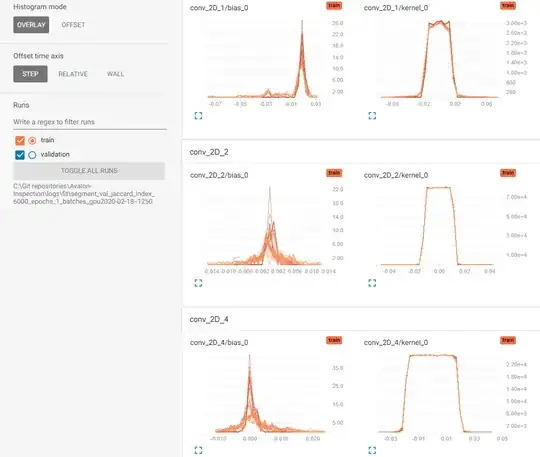

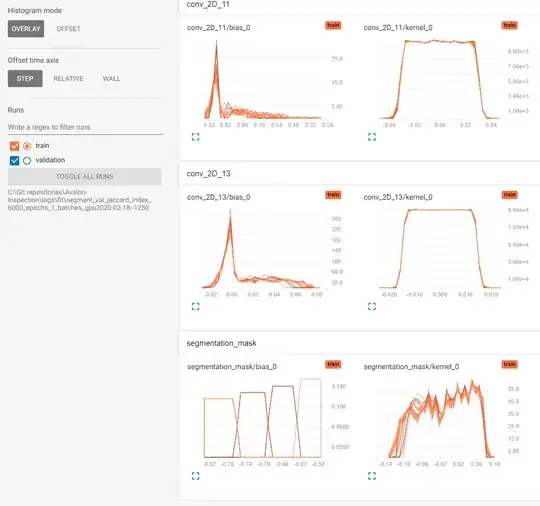

Here are my histograms and distributions. Is it possible to tell from these images whether my model suffers from vanishing gradients? (Some middle hidden layers are omitted for brevity.)