Is it possible to train a neural network for machine translation with no parallel bilingual data?


In the paper *Unsupervised Machine Translation Using Monolingual Corpora Only*, the authors propose a novel method.

Intuitively, it is an autoencoder shared across languages, except that the start-of-sentence token given to the decoder is set to the language it should produce.

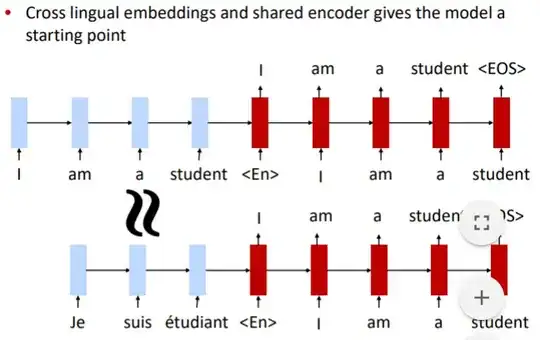
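To make the idea concrete, here is a minimal, framework-free sketch of how training examples for such a shared autoencoder could be built. It assumes the denoising variant used in that paper (word dropout plus a local shuffle that moves each word at most `k` positions); the token strings `<en>`/`<fr>`, the function names and the defaults `p_drop=0.1`, `k=3` are illustrative assumptions, and the encoder-decoder network itself is omitted.

```python
import random

# Hypothetical start-of-sentence tokens, one per language.
LANG_TOKENS = {"en": "<en>", "fr": "<fr>"}
EOS = "</s>"


def add_noise(tokens, p_drop=0.1, k=3, rng=random):
    """Corrupt a sentence: drop words, then shuffle locally (each word moves at most k places)."""
    # Word dropout: remove each token independently with probability p_drop.
    kept = [t for t in tokens if rng.random() >= p_drop]
    # Local shuffle: add uniform noise in [0, k + 1) to each position, then sort by it.
    keys = [i + rng.uniform(0, k + 1) for i in range(len(kept))]
    return [t for _, t in sorted(zip(keys, kept))]


def autoencoding_example(sentence, lang, rng=random):
    """Build (encoder input, decoder input, decoder target) from one monolingual sentence."""
    tokens = sentence.split()
    encoder_input = add_noise(tokens, rng=rng)
    # The decoder's first token names the language it should produce.
    decoder_input = [LANG_TOKENS[lang]] + tokens
    decoder_target = tokens + [EOS]
    return encoder_input, decoder_input, decoder_target


def translation_decoder_prefix(target_lang):
    """At test time, the decoder is started with the *other* language's token."""
    return [LANG_TOKENS[target_lang]]


rng = random.Random(0)
enc, dec_in, dec_out = autoencoding_example("the cat sat on the mat", "en", rng=rng)
print(dec_in)   # ['<en>', 'the', 'cat', 'sat', 'on', 'the', 'mat']
print(dec_out)  # ['the', 'cat', 'sat', 'on', 'the', 'mat', '</s>']
```

Because one decoder serves both languages, only the first token tells it which language to emit; roughly speaking, encoding an English sentence and starting the decoder with `<fr>` is what turns the autoencoder into a translator.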

Another, more advanced method is to use a pre-trained model. In the paper *Cross-lingual Language Model Pretraining*, the researchers propose an approach that combines a pre-trained multilingual BERT-style model (pre-trained with labeled data, although we don't need a labeled dataset for our own task) with the autoencoder mentioned above.
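One of the pre-training objectives in that paper is masked language modelling (MLM) over monolingual text. Below is a minimal, framework-free sketch of the input corruption for that objective, assuming the BERT-style rule of selecting about 15% of tokens and then replacing 80% of them with `[MASK]`, 10% with a random token and leaving 10% unchanged; `MASK_ID`, the function name and the vocabulary size are hypothetical. In the approach described above, a model pre-trained this way would then be used to initialize the encoder and decoder before the autoencoding step.

```python
import random

# Hypothetical special-token id; a real vocabulary defines its own.
MASK_ID = 4
# Label for positions that are not predicted (PyTorch's default ignore_index).
IGNORE_INDEX = -100


def mask_for_mlm(token_ids, vocab_size, lang_id, p_select=0.15, rng=random):
    """Select ~p_select of the tokens; of those, 80% -> [MASK], 10% -> random token,
    10% -> unchanged. Only selected positions receive a label."""
    inputs = list(token_ids)
    labels = [IGNORE_INDEX] * len(inputs)
    for i, tok in enumerate(token_ids):
        if rng.random() >= p_select:
            continue
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_ID
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)
        # else: keep the original token
    # One language id per position, so the model can add a language embedding.
    langs = [lang_id] * len(inputs)
    return inputs, labels, langs


inputs, labels, langs = mask_for_mlm(
    [10, 11, 12, 13, 14, 15, 16, 17], vocab_size=100, lang_id=0, rng=random.Random(0)
)
```

The loss is computed only where `labels` is not `IGNORE_INDEX`; keeping some selected tokens unchanged or randomized stops the model from relying on `[MASK]` always marking the positions it has to predict.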

Lerner Zhang


- That's crazy! I wonder if it could also be a way of addressing the grounding problem, if media experiences could somehow be mapped to a shared embedding space... – Neil Slater Feb 29 '20 at 10:17

- @NeilSlater I know only a little about the "grounding problem", but I think this paper may be helpful to you: https://arxiv.org/pdf/1609.05518.pdf – Lerner Zhang Feb 29 '20 at 10:27