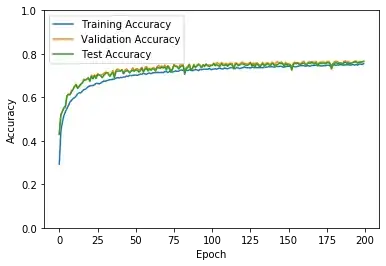

Is this due to my dropout layers being disabled during evaluation?
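For context, Keras's `Dropout` layer is documented as acting only when `training=True`. In that mode each unit is zeroed with probability `rate` and the surviving units are scaled by `1 / (1 - rate)`. During evaluation/inference it is a no-op. The plain-Python sketch below is not Keras's implementation. It mimics that "inverted dropout" behaviour on a list of floats so you can see how the train-time and eval-time outputs of the same layer differ. The function name `inverted_dropout` is hypothetical.

```python
import random


def inverted_dropout(inputs, rate, training, rng=None):
    """Hypothetical helper mimicking inverted dropout on a list of floats.

    training=True:  each value is zeroed with probability `rate`; survivors are
                    scaled by 1 / (1 - rate) so the expected value is unchanged.
    training=False: values pass through untouched (dropout is disabled).
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training:
        # Evaluation mode: the full network is used, nothing is dropped.
        return list(inputs)
    rng = rng if rng is not None else random.Random()
    scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else x * scale for x in inputs]


activations = [1.0] * 10
print(inverted_dropout(activations, rate=0.5, training=False))  # ten 1.0 values
print(inverted_dropout(activations, rate=0.5, training=True, rng=random.Random(0)))  # each value is 0.0 or 2.0
```

Because the training pass sees a randomly thinned network while evaluation sees the full one, metrics logged during training and metrics computed on held-out data are not measured on exactly the same model.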

I'm classifying the CIFAR-10 dataset with a CNN using the Keras library.

There are 50000 samples in the training set, and I'm holding out 20% of them for validation (10000 validation samples, 40000 training samples). The test set has a separate 10000 instances.
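Keras documents the `validation_split` argument of `Model.fit` as taking the *last* fraction of the samples, before any shuffling, as the validation set. Below is a minimal sketch of that split arithmetic for the numbers above. It assumes the split is applied to the 50000 CIFAR-10 training samples. The helper name `split_like_validation_split` is hypothetical, and its rounding may not match Keras's internals exactly for every input size.

```python
def split_like_validation_split(num_samples, validation_split):
    """Hypothetical helper: return (train_indices, val_indices) where the
    LAST `validation_split` fraction of samples (in original order) is held
    out for validation, as the Keras docs describe for `validation_split`."""
    if not 0.0 < validation_split < 1.0:
        raise ValueError("validation_split must be in (0, 1)")
    # Index at which training data ends and validation data begins.
    split_at = int(num_samples * (1.0 - validation_split))
    indices = list(range(num_samples))
    return indices[:split_at], indices[split_at:]


train_idx, val_idx = split_like_validation_split(50000, 0.2)
print(len(train_idx), len(val_idx))  # 40000 10000
```

Since the held-out samples come from the end of the array, it matters whether the data was shuffled before calling `fit`. If it was ordered in some way, the validation set may not be representative.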