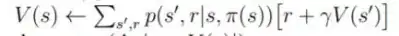

I'm currently trying to understand policy iteration in the context of RL. I read an article presenting it, and at some point it gives pseudo-code for the algorithm:

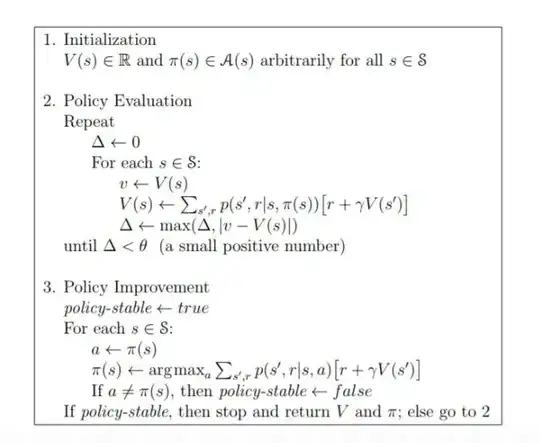

What I can't understand is this line:

From what I understand, policy iteration is a model-free algorithm, which means that it doesn't need to know the environment's dynamics. But this line needs $p(s',r \mid s, \pi(s))$ to compute $V(s)$. In my understanding, that is the transition function of the MDP, which gives the probability of landing in state $s'$ with reward $r$, given the previous state $s$ and the action taken. So I don't understand how we can compute $V(s)$ from $p(s',r \mid s, \pi(s))$, since it is a property of the environment that a model-free algorithm is not supposed to know.
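To make the question concrete, here is a minimal Python sketch of how I read that update. I am assuming the line is the usual policy evaluation step, $V(s) \leftarrow \sum_{s',r} p(s',r \mid s, \pi(s))\,[r + \gamma V(s')]$. The two-state MDP, the `model` dictionary, and the name `evaluate_policy` are all made up for illustration and do not come from the article.

```python
# Hypothetical 2-state, 2-action MDP (not from the article).
# model[s][a] is a list of (probability, next_state, reward) triples,
# i.e. a tabular stand-in for p(s', r | s, a).
model = {
    0: {0: [(1.0, 0, 0.0)], 1: [(0.8, 1, 1.0), (0.2, 0, 0.0)]},
    1: {0: [(1.0, 1, 0.0)], 1: [(1.0, 0, 2.0)]},
}


def evaluate_policy(model, policy, gamma=0.9, theta=1e-8):
    """Iterative policy evaluation for a deterministic policy."""
    V = {s: 0.0 for s in model}
    while True:
        delta = 0.0
        for s in model:
            v_old = V[s]
            # The line in question: the sum runs over p(s', r | s, pi(s)),
            # so the transition probabilities must be available here.
            V[s] = sum(
                p * (r + gamma * V[s_next])
                for p, s_next, r in model[s][policy[s]]
            )
            delta = max(delta, abs(v_old - V[s]))
        if delta < theta:
            return V


policy = {0: 1, 1: 1}  # always take action 1
V = evaluate_policy(model, policy)
print(V)
```

Written this way, `evaluate_policy` cannot run without `model` as an input, which is exactly the part I can't reconcile with the algorithm being model-free.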