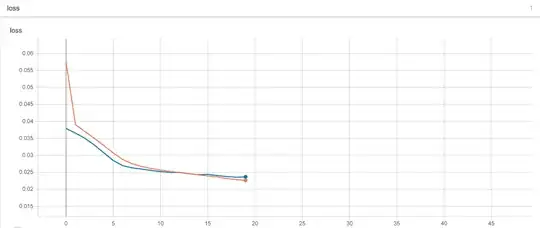

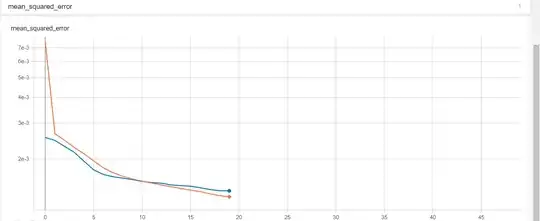

I was running my gated recurrent unit (GRU) model. I'd like an opinion on whether my loss and validation loss graph looks good, since I'm new to this and don't really know whether it would be considered underfitting.

2 Answers

Whenever you build an ML model, don't take accuracy too seriously (a mistake made by Netflix that cost them a lot). Try to measure hit scores instead, since they tell you how often your model actually worked for real-world users. However, if your model has to be judged on an error metric, try the RMSE score, since it penalises you more the further your predictions are from the line. Here is the link for more information on it: RMSE.

It's hard to say whether the model is overfitting or underfitting because your graph is vague (for example, what do the lines represent?). However, you can address underfitting with the following steps:

1) Increase the size or number of parameters in the ML model.
2) Increase the complexity or change the type of the model.
3) Increase the training time until the cost function is minimised.
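To illustrate the point about RMSE penalising predictions that are further off, here is a minimal sketch in plain Python. The data is made up for illustration, and MAE is included only as a point of comparison: both sets of predictions have the same total absolute error, but RMSE is larger when that error is concentrated in one big miss.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error: sqrt of the mean squared residual."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))

def mae(y_true, y_pred):
    """Mean absolute error, shown only for comparison."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical targets and two hypothetical sets of predictions.
y_true = [0.0, 0.0, 0.0, 0.0]
small_errors = [1.0, 1.0, 1.0, 1.0]   # four misses of size 1
one_big_error = [0.0, 0.0, 0.0, 4.0]  # a single miss of size 4

print("MAE :", mae(y_true, small_errors), mae(y_true, one_big_error))
print("RMSE:", rmse(y_true, small_errors), rmse(y_true, one_big_error))
```

Both cases have the same MAE, while RMSE doubles for the single large miss.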

For overfitting, you can try regularization. Methods like weight decay provide an easy way to control overfitting in large neural network models. A modern recommendation for regularization is to use early stopping together with dropout and a weight constraint.
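As a rough sketch of what early stopping does, the plain-Python function below scans a list of per-epoch validation losses and reports where training would stop. The function name, the `patience` and `min_delta` parameters, and the loss values are assumptions for illustration; they are similar in spirit to the options of the Keras `EarlyStopping` callback, but this is not that implementation.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a list of validation losses.

    Training stops once the validation loss has failed to improve on its
    best value by more than `min_delta` for `patience` epochs in a row.
    If that never happens, stop_epoch is the last epoch.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Improvement: remember it and reset the patience counter.
            best_loss = loss
            best_epoch = epoch
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(val_losses) - 1, best_epoch

# Hypothetical validation losses: improving at first, then drifting upward.
val_losses = [0.9, 0.7, 0.6, 0.61, 0.62, 0.65, 0.7]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
print(f"stop at epoch {stop_epoch}, best weights from epoch {best_epoch}")
```

In practice you would restore the weights from the best epoch rather than keep the ones from the epoch where training stopped.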

Thank you very much, this is some great info! My model suffered from overfitting; I did a little bit of tweaking and then it fit well. I added L2 regularization and dropout, decreased the number of layers, and also reduced the learning rate a little. – AliY Apr 12 '20 at 19:47

You should at least crop the plots and add a legend. Maybe also provide some scores (accuracy, AUC, whatever you're using). Anyway, it doesn't look like your model is underfitting: if it were, you would have high error in both the training and test phases, and the lines would not cross.
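The rule of thumb above can be written down as a small check. This is only a sketch: the function name and both thresholds (`high_loss`, `gap_ratio`) are hypothetical, and sensible values depend entirely on the scale of your loss and your data.

```python
def diagnose_fit(train_loss, val_loss, high_loss=0.1, gap_ratio=1.5):
    """Very rough heuristic based on the final training and validation losses.

    high_loss and gap_ratio are arbitrary illustrative thresholds,
    not recommended values.
    """
    if train_loss >= high_loss and val_loss >= high_loss:
        # High error on both sets: the model has not learned the task.
        return "underfitting"
    if val_loss > gap_ratio * train_loss:
        # Low training error but clearly worse validation error.
        return "overfitting"
    return "reasonable fit"

# Hypothetical final losses for three different training runs.
print(diagnose_fit(0.30, 0.32))
print(diagnose_fit(0.001, 0.02))
print(diagnose_fit(0.010, 0.011))
```

A check like this is no substitute for looking at the full curves, which show how the gap between the two losses develops over time.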

My final MSE loss was 0.001371944 and the validation MSE loss was 0.001448105. The test scores were [0.030501108373429363, 0.00272163194425038]. Do crossing lines indicate a good fit? – AliY Mar 13 '20 at 02:13

It's hard to say from a single plot. The best thing to do is always to train the same model several times, save the loss and MSE history of each run, and then average them. That way you get rid of some random effects (for example, with Keras it's not unusual to see a lower validation loss than training loss, because of how the training loss is averaged over batches). Anyway, your MSE is pretty low. I would be more concerned about overfitting, but the test MSE is also low, which is good. Are you using a ready-made dataset from TensorFlow? – Edoardo Guerriero Mar 15 '20 at 23:22
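The averaging over several runs could look like the sketch below. It assumes each run's history is a plain list of per-epoch loss values (with Keras, something like `history.history["val_loss"]` from each call to `fit`); the function name and the numbers are made up for illustration.

```python
def average_histories(histories):
    """Average per-epoch values across several training runs.

    `histories` is a list of runs, each a list with one loss value per epoch.
    All runs must cover the same number of epochs.
    """
    if not histories:
        raise ValueError("need at least one run")
    n_epochs = len(histories[0])
    if any(len(run) != n_epochs for run in histories):
        raise ValueError("all runs must have the same number of epochs")
    # zip(*histories) groups the values of all runs epoch by epoch.
    return [sum(values) / len(histories) for values in zip(*histories)]

# Hypothetical validation-loss histories from three runs of the same model.
runs = [
    [0.90, 0.50, 0.30],
    [0.70, 0.50, 0.10],
    [0.80, 0.50, 0.20],
]
mean_curve = average_histories(runs)
print(mean_curve)
```

Plotting the averaged training and validation curves together makes it easier to judge the fit than any single noisy run.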