Lately, I have implemented DQN for Atari Breakout. Here is the code:

https://github.com/JeremieGauthier/AI_Exercices/blob/master/Atari_Breakout/DQN_Breakout.py

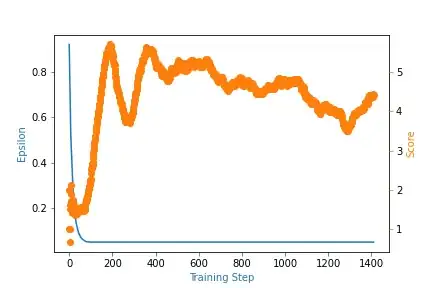

I have trained the agent for over 1500 episodes, but the training leveled off at around 5 as score. Could someone look at the code and point out a few things that should be corrected?

Actually, the training is not going more than 5 in average score. Is there a way to improve my performance?