This is also a question I stumbled upon. Thanks to ted for the explanation, it is very helpful; I will try to elaborate a little bit. Let's keep using DeepMind's Simon Osindero's slide:

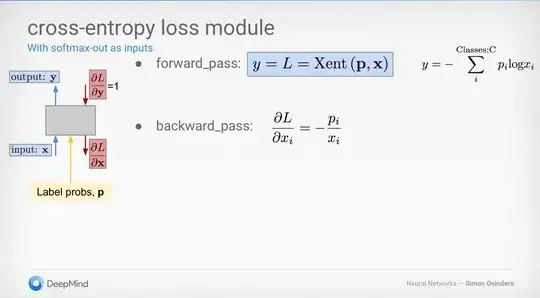

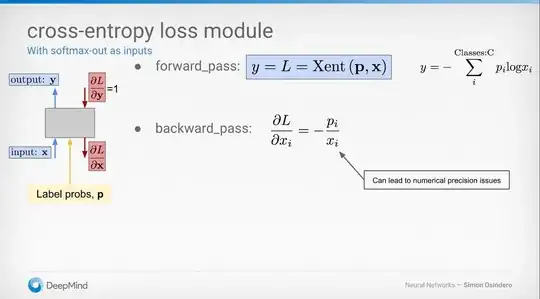

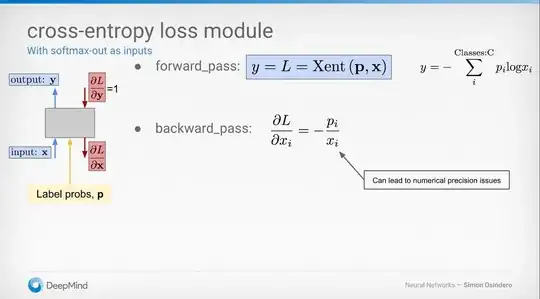

The grey block on the left is only a cross-entropy operation: the input $x$ (a vector) could be the softmax output of the previous layer (not the input to the neural network), and $y$ (a scalar) is the cross entropy of $x$ against the target $p$, i.e. $y = -\sum_i p_i \log x_i$. To propagate the gradient back, we need $dy/dx_i$, which is $-p_i/x_i$ for each element of $x$.

As we know, the softmax function scales the logits into the range [0,1]. So if, in one training step, the neural network becomes super confident and predicts one of the probabilities $x_i$ to be 0 (in floating point, a tiny value underflows to exactly 0), then we have a numerical problem in calculating $dy/dx_i$: a division by zero.
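To make the failure concrete, here is a minimal sketch in plain Python. The logits and target are made-up values chosen to force the underflow, and the helper names `softmax` and `xent_grad_wrt_probs` are hypothetical, not taken from any library.

```python
import math

def softmax(logits):
    # Subtract the max so exp() cannot overflow; it can still underflow to 0.0.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def xent_grad_wrt_probs(p, x):
    # dy/dx_i = -p_i / x_i for y = -sum_i p_i * log(x_i)
    return [-p_i / x_i for p_i, x_i in zip(p, x)]

logits = [0.0, 1000.0]  # hypothetical: the network is extremely confident in class 1
p = [1.0, 0.0]          # one-hot target: the true class is actually class 0

x = softmax(logits)     # exp(-1000) underflows, so x[0] is exactly 0.0

try:
    grad = xent_grad_wrt_probs(p, x)
except ZeroDivisionError:
    # Plain Python raises here; array libraries typically produce -inf or nan instead.
    grad = None
```

Either way, nothing useful can be propagated back through the softmax once $x_i$ has collapsed to 0.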

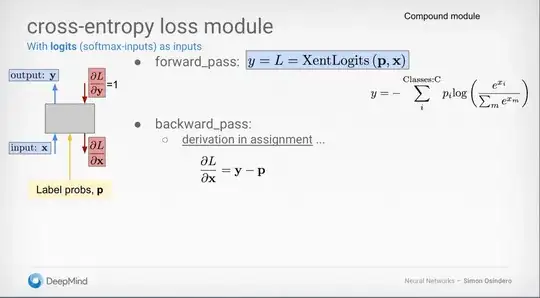

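In the other case, where we take the logits and calculate the softmax and cross entropy in one shot (the XentLogits function), we don't have this problem. Here $x$ is the vector of logits, and the derivative of XentLogits is $dy/dx_i = \mathrm{softmax}(x)_i - p_i$ (assuming the targets $p_i$ sum to 1), which involves no division and stays bounded. A more elaborate derivation can be found here.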
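As a rough illustration of the fused version, the sketch below computes the loss through a log-sum-exp and the gradient as softmax minus target. It is only a toy in plain Python, not how any particular framework implements it; the function names are hypothetical, and the gradient formula assumes the targets sum to 1.

```python
import math

def xent_logits(p, logits):
    # y = -sum_i p_i * log_softmax(logits)_i, with log_softmax computed
    # via log-sum-exp so no probability is ever passed to log().
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(p_i * (z - lse) for p_i, z in zip(p, logits))

def xent_logits_grad(p, logits):
    # dy/dlogit_i = softmax(logits)_i - p_i (valid when sum(p) == 1)
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total - p_i for e, p_i in zip(exps, p)]

logits = [0.0, 1000.0]  # same hypothetical, overconfident logits as before
p = [1.0, 0.0]          # one-hot target: the true class is class 0

loss = xent_logits(p, logits)       # finite: 1000.0
grad = xent_logits_grad(p, logits)  # finite: [-1.0, 1.0]
```

With the same extreme logits that broke the two-step computation, both the loss and the gradient stay finite, because the underflowed probability is only ever subtracted from, never divided by or fed to a logarithm.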