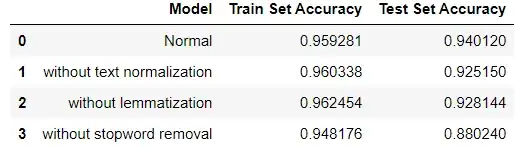

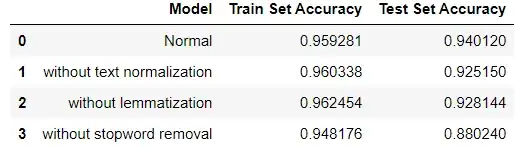

As far as I know, there are few aspects that would probably improve the model score:

- Normalization

- Lemmatization

- Stopwords removal (as you asked here)

Based on your question, "is removing top frequent words (stopwords) will improve the model score?". The answer is, it depends on what kind of stopwords are you removing. The problem here is that if you do not remove stop words, the noise will increase in the dataset because of words like I, my, me, etc. Here is the comparison of those three aspects using SVM Classifier.

You may see that without stopwords removal the Train Set Accuracy decreased to 94.81% and the Test Set Accuracy decreased to 88.02%. But, you should be careful about what kind of stopwords you are removing.

If you are working with basic NLP techniques like BOW, Count Vectorizer or TF-IDF(Term Frequency and Inverse Document Frequency) then removing stopwords is a good idea because stopwords act like noise for these methods. If you working with LSTM’s or other models which capture the semantic meaning and the meaning of a word depends on the context of the previous text, then it becomes important not to remove stopwords.

So, what's the solution?

You may want to create a Python package nlppreprocess which removes stops words that are not necessary. It also has some additional functionalities that can make cleaning of text fast. For example:

from nlppreprocess import NLP

import pandas as pd

nlp = NLP()

df = pd.read_csv('some_file.csv')

df['text'] = df['text'].apply(nlp.process)

Source:

https://github.com/miguelfzafra/Latest-News-Classifier

https://towardsdatascience.com/why-you-should-avoid-removing-stopwords-aa7a353d2a52