I'm trying to train a neural net to choose a subset from some list of objects. The input is a list of objects $(a,b,c,d,e,f)$ and for each list of objects the label is a list composed of 0/1 - 1 for every object that is in the subset, for example $(1,1,0,1,0,1)$ represents choosing $a,b,d,f$. I thought about using MSE loss to train the net but that seemed like a naive approach, is there some better loss function to use in this case?

1 Answers

The choice of the loss function depends primarily on the type of task you're tackling: classification or regression. Your problem is clearly a classification one since you have classes to which a given input can either belong or not. More specifically, what you're trying to do is multi-label classification, which is different from multi-class classification. The difference is important to stress out and it consists in the format of the target labels.

# Multi-class --> one-hot encoded labels, only 1 label is correct

[1,0,0], [0,1,0], [0,0,1]

# Multi-label --> multiple labels can be correct

[1,0,0], [1,1,0], [1,1,1], [0,1,0], [0,1,1], [0,0,1]

MSE is used when continuous values are predicted for some given inputs, therefore it belongs to the loss functions suitable for regression and it should not be used for your problem.

Two loss functions that you could apply are Categorical Cross Entropy or Binary Cross Entropy. Despite being both based on cross-entropy, there is an important difference between them, consisting of the activation function they require.

Binary Cross Entropy

$$L(y, \hat{y})=-\frac{1}{N} \sum_{i=0}^{N}\left(y * \log \left(\hat{y}_{i}\right)+(1-y) * \log \left(1-\hat{y}_{i}\right)\right)$$

Despite the name that suggests this loss should be used only for binary classification, this is not strictly true and actually, this is the loss function that conceptually is best suited for multi-label tasks.

Let's start with the binary classification case. We have a model that returns a single output score, to which the sigmoid function is applied in order to constrain the value between 0 and 1. Since we have a single score, the resulting value can be interpreted as a probability of belonging to one of the two classes, and the probability of being to the other class can be computed as 1 - value.

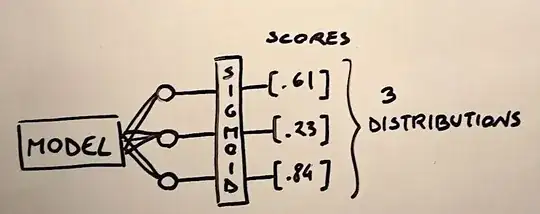

What if we have multiple output scores, for example, 3 nodes for 3 classes? In this case, we still could apply the sigmoid function, ending up with three scores between 0 and 1.

The important aspect to capture is that since the sigmoid treat each output node independently, the 3 scores would not sum up to 1, so they will represent 3 different probability distributions rather than a unique one. This means that each score after the sigmoid represents a distinct probability of belonging to that specific class. In the example above, for example, the prediction would be true for the two labels with a score higher than 0.5 and false for the remaining label. This also means that 3 different loss have to be computed, one for each possible output. In practice, what you'll be doing is to solve n binary classification problems where n is the number of possible labels.

Categorical Cross Entropy

$$L(y, \hat{y})=-\sum_{j=0}^{M} \sum_{i=0}^{N}\left(y_{i j} * \log \left(\hat{y}_{i j}\right)\right)$$

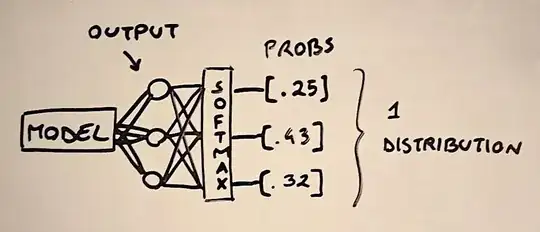

In categorical cross-entropy we apply the softmax function to the output scores of our model, to constrain them between 0 and 1 and to turn them into a probability distribution (they all sum to 1). The important thing to notice is that in this case, we end up with a unique distribution because, unlike the sigmoid function, the softmax consider all output scores together (they are summed in the denominator).

This implies that categorical cross-entropy is best suited for multi-class tasks, in which we end up with a single true prediction for each input instance. Nevertheless, this loss can also be applied also for multi-label tasks, as done in this paper. To do so, the authors turned each target vector into a uniform probability distribution, which means that the values of the true labels are not 1 but 1/k, with k being the total number of true labels.

# Example of target vector tuned into uniform probability distribution

[0, 1, 1] --> [0, .5, .5]

[1, 1, 1] --> [.33, .33, .33]

Note also that in the above-mentioned paper the authors found that categorical cross-entropy outperformed binary cross-entropy, even this is not a result that holds in general.

Lastly, the are other loss that you could try which differ functions rather than cross-entropy, for example:

Hamming-Loss

It computes the fraction of wrong predicted labels

$$\frac{1}{|N| \cdot|L|} \sum_{i=1}^{|N|} \sum_{j=1}^{|L|} \operatorname{xor}\left(y_{i, j}, z_{i, j}\right)$$

Exact Match Ratio

Only predictions for which all target labels were correctly classified are considered correct.

$$ExactMatchRatio,\space M R=\frac{1}{n} \sum_{i=1}^{n} I\left(Y_{i}=Z_{i}\right)$$

- 1,074

- 1

- 6

- 30

- 5,153

- 1

- 11

- 25

-

I think I may have been unclear. Each instance has only one desired subset. How is it a multi-label problem? – Gilad Deutsch Apr 22 '20 at 14:55

-

@GiladDeutch So basically you're dealing with unique tuples of 6 elements (indices) for each input list? In this case the problem is simply multi-class classification and I would go for categorical cross-entropy. I would also change the representation of the target label in order to be hot-encoded. So if you have n possible tuples combination I would turn (1,0,0,0,0,0) into [1,0,..0] (length=n), then (1,1,0,0,0,0) into [0,1,0,..0] (length=n) and so on. – Edoardo Guerriero Apr 22 '20 at 15:05

-

I'm not sure you follow. The 1's represent items from the list we want to choose: (1,1,0,0,0,0) would mean choosing the first two elements from the list, [0,1,0,..0] would mean choosing only the second, they're not the same. – Gilad Deutsch Apr 22 '20 at 19:05

-

@GiladDeutch I do follow. I'll try to explain in a different way. First of all, you can use categorical cross entropy, it should work. Nevertheless I would **recode** the labels you currently have using **one hot encoding**. This means that you will transform each tuple containing 6 binary labels into a vector of size **n**, i.e. the total number of unique tuples in your dataset. So again, taking for example (1,1,0,0,0,0), it would be converted in a vector of length n containing a unique 1 value, such as [0,1,0,..n]. It would be just a mapping between tuples and one-hot encoded vectors. – Edoardo Guerriero Apr 22 '20 at 19:21

-

The reason why I would do that is in the answer I wrote. Categorical cross entropy is meant to be used when only a single output node is suppose to be activated. If you use a tuple like (1,1,0,0,0,0) to calculate the error, you would train the model to maximise the output of the first 2 output nodes, which is ok but impractical. If you recode the tuples and train the model representing the tuples with one hot encoding instead, you would need **n** output nodes, and the model would learn to maximise the score of only one node, associated to a hot-encoding of one of the possible tuples. – Edoardo Guerriero Apr 22 '20 at 19:27

-

if it is still not clear I can add a sketch to the answer. – Edoardo Guerriero Apr 22 '20 at 19:27

-

Ok, I think I understand. But if I have subsets of a set of size 6 for example then the number of possible labels is the amount of possible subsets: n=2^6=64. So, one hot encoding would signifanctly enlarge the dimensions of the architecture. What you're saying is categorical cross entropy would work with the labels as they are, but it wouldn't be ideal? – Gilad Deutsch Apr 22 '20 at 20:28

-

@GiladDeutch because as I explained in the answer, categorical cross entropy use the softmax, which turn all output scores into a unique prob distribution. This means that only one node is supposed to have the highest probability. Without one-hot labels there would be cases like (1,1,1,1,0,0) in which the model would be forced to predict a similar probability for 4 out of 6 nodes, so in the best case it will learn to predict .25 .25 .25 .25 0 0. which are not really high scores. With one hot encoding, you would allow the model to maximise a single output node per possible labels combination. – Edoardo Guerriero Apr 22 '20 at 20:43

-

Nevertheless, if your dataset is not really large or you have other issue that prohibit one.hot encoding, you might try the approach used in the paper I linked. It would be like treating the problem as a multi-label task instead of a multi-class, but considering the format of your labels it will be conceptually the same, so I expect it to work. – Edoardo Guerriero Apr 22 '20 at 20:45

-

I see your point, but it seems like using MSE loss and just taking each element with an output of more than 0.5 would much simpler. or if the subset size is known and is k, then just take the k elements with the highest outputs. Would that be a terrible solution in your opinion? – Gilad Deutsch Apr 22 '20 at 23:57

-

@GiladDeutch The problems I see in using MSE is that the main purpose of it is to work with real values rather than binary ones. The MSE is a derivation of a linear regression, it assume the output variable to be a combination of the input values plus gaussian noise with constant mean and variance. If you use it in classification the residual will assume discrete values, in your case they would be 0, 1, 4, 9, 16, 25 or 36 and this why usually MSE is not used in classification. But of course in practice no one will stop you from trying! – Edoardo Guerriero Apr 23 '20 at 00:47

-

I see. What about the hamming and exact match ratio losses? I never heard of them but they seem like they could fit – Gilad Deutsch Apr 23 '20 at 10:59

-

@GiladDeutch if you use the hamming or EMR loss with your actually label format you'll be performing multi-label classification. The hamming loss for example would return an error of 2 for the two single vector [0,1,0,0] and [1,1,1,0]. If you treat them as classes the error will become 1, just because they are not equal. To be honest I do agree that one-hot encoding might be a pain in the ass and I think that treating the problem as multi-label is the simplest way to go. Even though you have unique combinations, this would be just an added helpful property of your dataset. – Edoardo Guerriero Apr 23 '20 at 12:24

-

Just a comment which is going to explain: I appreciate this answer; I had a kind of like question before and this totally cleared it up; Thanks! – William Martens Dec 27 '21 at 15:46