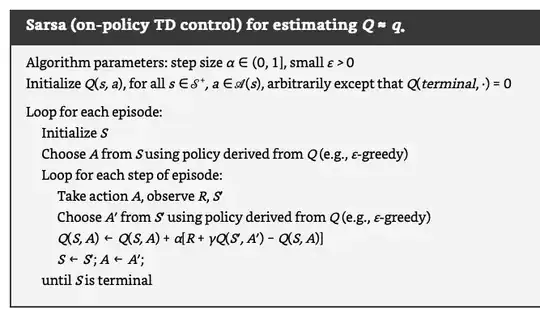

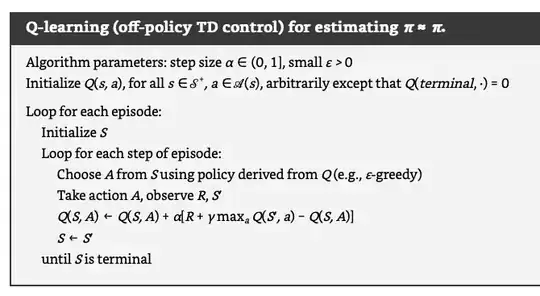

From Sutton and Barto's book Reinforcement Learning (Adaptive Computation and Machine Learning series), are the following definitions:

To aid my learning of RL and gain an intuition, I'm focusing on the differences between some algorithms. I've selected Sarsa (on-policy TD control) for estimating Q ≈ q * and Q-learning (off-policy TD control) for estimating π ≈ π *.

For conciseness I'll refer to Sarsa (on-policy TD control) for estimating Q ≈ q * and Q-learning (off-policy TD control) for estimating π ≈ π * as Sarsa and Q-learning respectively.

Are my following assertions correct?

The primary differences are how the Q values are updated.

Sarsa Q value update: $ Q ( S, A ) ← Q ( S, A ) + α [ R + \gamma Q ( S ′ , A ′ ) − Q ( S, A ) ] $

Q-learning Q value update: $ Q ( S, A ) ← Q ( S, A ) + α [ R + \gamma \max_a Q ( S ′ , a ) − Q ( S, A ) ] $

Sarsa, in performing the td update subtracts the discounted Q value of the next state and action, S', A' from the Q value of the current state and action S, A. Q-learning, on the other hand, takes the discounted difference between the max action value for the Q value of the next state and current action S', a. Within the Q-learning episode loop the $a$ value is not updated, is an update made to $a$ during Q-learning?

Sarsa, unlike Q-learning, the current action is assigned to the next action at the end of each episode step. Q-learning does not assign the current action to the next action at the end of each episode step

Sarsa, unlike Q-learning, does not include the arg max as part of the update to Q value.

Sarsa and Q learning in choosing the initial action for each episode both use a "policy derived from Q", as an example, the epsilon greedy policy is given in the algorithm definition. But any policy could be used here instead of epsilon greedy? Q learning does not utilise the next state-action pair in performing the td update, it just utilises the next state and current action, this is given in the algorithm definition as $ Q ( S ′ , a ) $ what is $a$ in this case ?