Weak supervision is supervised learning with uncertainty in the labeling, e.g. because the labels were produced automatically or by non-experts [1].

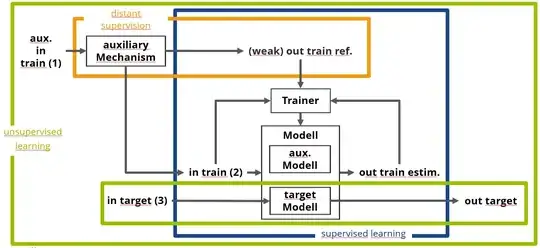

Distant supervision [2, 3] is a type of weak supervision that uses an auxiliary automatic mechanism, rather than non-expert human labelers, to produce the weak labels (reference outputs).
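To make my reading of this concrete, here is a toy sketch of distant supervision in the style of relation extraction. The knowledge base `KB`, the function `distant_labels` and the sentences are all hypothetical. The knowledge base plays the role of the auxiliary mechanism: it labels sentences without any human reading them, and the labels are noisy.

```python
# Toy sketch of distant supervision (hypothetical knowledge base and sentences).
# The knowledge base (KB) is the auxiliary automatic mechanism: it is consulted
# to label sentences; no human looks at the sentences themselves.

KB = {
    ("Paris", "France"): "capital_of",
    ("Berlin", "Germany"): "capital_of",
}

def distant_labels(sentences, kb):
    """Label a sentence with a KB relation if it mentions both entities of a KB pair.

    The labels are weak: a sentence that mentions both entities need not
    actually express the relation.
    """
    labeled = []
    for sentence in sentences:
        label = "no_relation"
        for (head, tail), relation in kb.items():
            if head in sentence and tail in sentence:
                label = relation
                break
        labeled.append((sentence, label))
    return labeled

sentences = [
    "Paris is the capital of France.",
    "She flew from Paris to France's south coast.",  # mentions both, but not the relation
    "Berlin hosted the conference.",                 # only one entity of the pair
]
for sentence, label in distant_labels(sentences, KB):
    print(f"{label:12} | {sentence}")
```

The second sentence receives `capital_of` even though it does not express that relation, which is the kind of label noise that makes this weak supervision.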

According to this answer:

> Self-supervised learning (or self-supervision) is a supervised learning technique where the training data is automatically labelled.

In the examples of self-supervised learning I have seen so far, the labels were extracted from the input data.
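A minimal sketch of what I mean by "extracted from the input data", using next-word prediction as the example (the function name `next_token_pairs` and the context size `k` are my own, hypothetical choices): an unlabeled token sequence is turned into (input, label) pairs where each label is simply a later part of the same input.

```python
# Toy sketch of self-supervision: the "label" is a piece of the input itself.
# Each training pair is (previous k tokens, next token), as in next-word prediction.

def next_token_pairs(tokens, k=2):
    """Turn an unlabeled token sequence into (context, target) pairs."""
    return [(tuple(tokens[i - k:i]), tokens[i]) for i in range(k, len(tokens))]

tokens = "the cat sat on the mat".split()
for context, target in next_token_pairs(tokens):
    print(context, "->", target)
```

No external resource is consulted here; every target already appears in `tokens`.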

What is the difference between distant supervision and self-supervision?

- Is it that for self-supervision the labels must come from the input data, while for distant supervision they can come from anywhere (which would make self-supervision a type of distant supervision)?

- Or must the labels in distant supervision come from somewhere other than the input data?

- Given the statement "In robotics, this can be done by finding and exploiting the relations or correlations between inputs coming from different sensor modalities.", it seems that for self-supervised learning the labels do not even have to originate from the input data. (Or did I misinterpret the quote?)
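Here is how I picture the robotics case from the last bullet, as a toy sketch with entirely hypothetical data, names (`Reading`, `label_from_range`) and threshold: a short-range distance sensor labels what the camera sees, so that a camera-only model can later be trained on those automatically produced labels. Whether the range sensor counts as "input data" is exactly the point I am unsure about.

```python
# Toy sketch of cross-modal self-supervision (all data and thresholds hypothetical).
# One sensor modality (range) produces labels for another modality (camera).

from dataclasses import dataclass

@dataclass
class Reading:
    image_features: list[float]  # stand-in for a camera image patch
    distance_m: float            # range-sensor reading for the same direction

OBSTACLE_THRESHOLD_M = 0.5  # hypothetical: closer than this counts as an obstacle

def label_from_range(readings):
    """Pair each camera input with a 0/1 obstacle label derived from the range sensor."""
    return [(r.image_features, int(r.distance_m < OBSTACLE_THRESHOLD_M)) for r in readings]

readings = [
    Reading([0.9, 0.1], 0.3),
    Reading([0.2, 0.8], 2.0),
]
dataset = label_from_range(readings)
print(dataset)  # camera features paired with 1 (obstacle) or 0 (free)
```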

(Setup mentioned in discussion: