In a course that I am attending, the cost function of a support vector machine is given by

$$J(\theta)=\sum_{i=1}^{m}\left[y^{(i)} \operatorname{cost}_{1}\left(\theta^{T} x^{(i)}\right)+\left(1-y^{(i)}\right) \operatorname{cost}_{0}\left(\theta^{T} x^{(i)}\right)\right]+\frac{\lambda}{2} \sum_{j=1}^{n} \theta_{j}^{2}$$
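To make the structure of $J(\theta)$ concrete, here is a minimal NumPy sketch that evaluates it with $\operatorname{cost}_{1}$ and $\operatorname{cost}_{0}$ passed in as functions, since their exact form is what I am asking about. It assumes that `X` is an $m \times (n+1)$ design matrix whose first column is all ones ($x_0 = 1$), that `y` holds 0/1 labels, and that the bias $\theta_0$ is not regularized (the penalty sums from $j = 1$). The function name `svm_objective` is hypothetical.

```python
import numpy as np

def svm_objective(theta, X, y, lam, cost1, cost0):
    """Evaluate J(theta) for a given pair of per-example cost functions.

    Assumptions (not from the course): X has shape (m, n+1) with a
    leading column of ones, y is a 0/1 vector of length m, and theta
    has length n+1. theta[0] (the bias) is excluded from the penalty.
    """
    z = X @ theta  # theta^T x^(i) for every example at once
    data_term = np.sum(y * cost1(z) + (1 - y) * cost0(z))
    reg_term = (lam / 2.0) * np.sum(theta[1:] ** 2)
    return data_term + reg_term


# Usage with the logistic-regression costs, as a stand-in for the SVM ones
sigmoid = lambda z: 1.0 / (1.0 + np.exp(-z))
log_cost1 = lambda z: -np.log(sigmoid(z))
log_cost0 = lambda z: -np.log(1.0 - sigmoid(z))

X = np.array([[1.0, 2.0], [1.0, -1.0]])
y = np.array([1, 0])
theta = np.array([0.0, 0.0])
print(svm_objective(theta, X, y, lam=1.0, cost1=log_cost1, cost0=log_cost0))
```

Passing the costs as arguments keeps the data term and the regularization term separate, so the same function works for any choice of $\operatorname{cost}_{1}$ and $\operatorname{cost}_{0}$.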

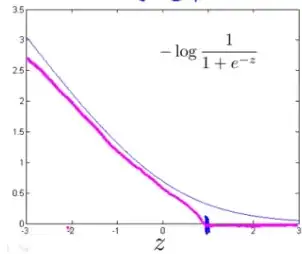

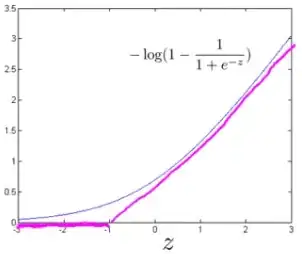

where $\operatorname{cost}_{1}$ and $\operatorname{cost}_{0}$ look like this (in Magenta):

What are the explicit definitions of the functions $\operatorname{cost}_{1}$ and $\operatorname{cost}_{0}$?

For example, with logistic regression, $\operatorname{cost}_{1}(z) = -\log \operatorname{sigmoid}(z)$ and $\operatorname{cost}_{0}(z) = -\log\left(1-\operatorname{sigmoid}(z)\right)$, where $z = \theta^{T} x$.
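For reference, the logistic-regression costs can be computed in a numerically stable way using the identities $-\log \operatorname{sigmoid}(z) = \log(1 + e^{-z})$ and $-\log(1 - \operatorname{sigmoid}(z)) = \log(1 + e^{z})$. This is a small NumPy sketch; the function names `logistic_cost1` and `logistic_cost0` are hypothetical.

```python
import numpy as np

def logistic_cost1(z):
    """-log(sigmoid(z)): the cost for an example with label y = 1."""
    # log(1 + exp(-z)), computed with logaddexp to avoid overflow
    return np.logaddexp(0.0, -z)

def logistic_cost0(z):
    """-log(1 - sigmoid(z)): the cost for an example with label y = 0."""
    # 1 - sigmoid(z) = sigmoid(-z), so this is log(1 + exp(z))
    return np.logaddexp(0.0, z)


z = np.array([-2.0, 0.0, 2.0])
print(logistic_cost1(z))  # decreases as z grows
print(logistic_cost0(z))  # increases as z grows
```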