I am a student who recently started learning machine learning, and one thing keeps confusing me. I have tried multiple sources but could not find an answer.

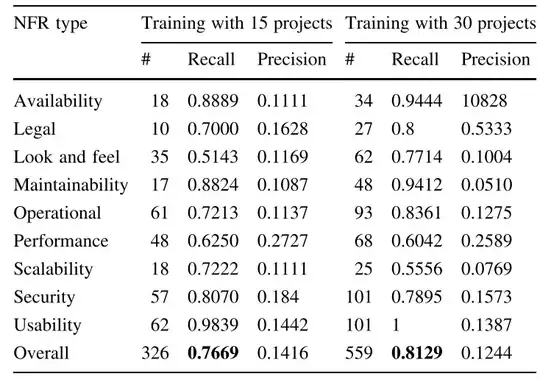

As following table shows (this is from some paper):

In multi-class classification, is it possible for every class to have higher recall than precision?

It is common for recall to be higher than precision for some classes, or for the overall (aggregate) performance, but is it possible for recall to stay greater than precision for every class simultaneously?

The total amount of test data is fixed, so as I understand it, if recall is greater than precision for one class, then recall must be smaller than precision for at least one other class.
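The counting argument behind this intuition can be sketched in Python. Assuming rows are true classes and columns are predicted classes, each misclassified sample is counted once as a false negative (for its true class) and once as a false positive (for its predicted class), so the per-class totals must balance: if one class has fewer false negatives than false positives, some other class must have more. The helper names below, including the random matrix generator, are hypothetical and only for illustration.

```python
import random


def fn_fp_per_class(cm):
    """Per-class false negatives and false positives.

    Assumes cm[i][j] counts samples with true class i predicted as class j.
    """
    k = len(cm)
    fn = [sum(cm[c]) - cm[c][c] for c in range(k)]                       # row sum minus diagonal
    fp = [sum(cm[r][c] for r in range(k)) - cm[c][c] for c in range(k)]  # column sum minus diagonal
    return fn, fp


def random_confusion_matrix(k, n_samples, rng):
    """Hypothetical helper: drop n_samples random (true, predicted) pairs into a k x k matrix."""
    cm = [[0] * k for _ in range(k)]
    for _ in range(n_samples):
        cm[rng.randrange(k)][rng.randrange(k)] += 1
    return cm


rng = random.Random(0)
for k in range(2, 7):
    cm = random_confusion_matrix(k, n_samples=100, rng=rng)
    fn, fp = fn_fp_per_class(cm)
    # Both totals equal the number of off-diagonal (misclassified) samples.
    assert sum(fn) == sum(fp)
    print(k, sum(fn), sum(fp))
```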

I tried to construct a made-up confusion matrix that reproduces this result, but I failed. Can someone explain this to me?
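One way to attempt this systematically is to enumerate every possible 3-class confusion matrix over 10 test samples and test the condition directly. The sketch below does that by brute force (43,758 matrices). It assumes rows are true classes and columns are predicted classes, and it treats a class whose precision or recall is 0/0 as not satisfying the condition; the function names are hypothetical.

```python
def per_class_precision_recall(cm):
    """Return (precision, recall) lists, one entry per class.

    Assumes cm[i][j] = number of samples with true class i predicted as class j.
    An entry is None when its denominator is zero (0/0 is undefined).
    """
    k = len(cm)
    precision, recall = [], []
    for c in range(k):
        tp = cm[c][c]
        predicted_c = sum(cm[r][c] for r in range(k))  # column sum = TP + FP
        actual_c = sum(cm[c])                          # row sum = TP + FN
        precision.append(tp / predicted_c if predicted_c else None)
        recall.append(tp / actual_c if actual_c else None)
    return precision, recall


def recall_beats_precision_everywhere(cm):
    """True if recall > precision holds strictly for every class."""
    precision, recall = per_class_precision_recall(cm)
    return all(p is not None and r is not None and r > p
               for p, r in zip(precision, recall))


def compositions(n, parts):
    """Yield every tuple of `parts` non-negative integers summing to n."""
    if parts == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in compositions(n - first, parts - 1):
            yield (first,) + rest


def matrices_with_total(k, total):
    """Yield every k x k count matrix whose entries sum to `total`."""
    for flat in compositions(total, k * k):
        yield [list(flat[i * k:(i + 1) * k]) for i in range(k)]


# Exhaustive search: 3 classes, 10 test samples.
counterexamples = [cm for cm in matrices_with_total(3, 10)
                   if recall_beats_precision_everywhere(cm)]
print(len(counterexamples))  # prints 0
```

Changing the arguments to `matrices_with_total` lets you try other class counts or sample sizes, though the number of matrices grows quickly.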

Here is a further description:

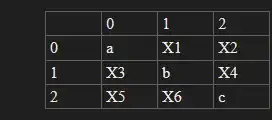

Assume we have classified 10 samples into 3 classes and obtained a confusion matrix like the one in the image above (diagonal entries a, b, c; off-diagonal entries x1, ..., x6).

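If we want recall to be greater than precision for each class (here classes 0, 1 and 2), we need FN < FP for every class: precision = TP/(TP+FP) and recall = TP/(TP+FN) share the numerator TP, so (with TP > 0) recall > precision exactly when FN < FP. That means we need: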

x1+x2 < x3+x5

x3+x4 < x1+x6

x5+x6 < x2+x4

There is a contradiction: the left-hand sides of these inequalities add up to the same total as the right-hand sides, namely sum(x1...x6), which equals 10 - sum(a, b, c) in this case.
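Written out, adding the three inequalities term by term makes the contradiction explicit:

$$
\underbrace{(x_1+x_2)+(x_3+x_4)+(x_5+x_6)}_{\text{left-hand sides}} \;<\; \underbrace{(x_3+x_5)+(x_1+x_6)+(x_2+x_4)}_{\text{right-hand sides}}
$$

Both sides equal $S = x_1+x_2+x_3+x_4+x_5+x_6 = 10-(a+b+c)$, so the sum reads $S < S$, which no choice of counts can satisfy.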

Hence, I think it is not feasible to get recall higher than precision for all classes, because the total number of classified samples is fixed.

I don't know whether I am right or wrong; please tell me if I made a mistake.