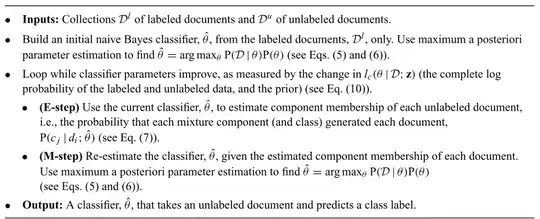

I am learning the expectation-maximization (EM) algorithm from the article "Semi-Supervised Text Classification Using EM". The algorithm is very interesting, but it looks to me as if it relies on circular inference.

I am not sure whether I understand the description correctly, but this is how I read it:

Step 1: train a naive Bayes (NB) classifier on the labeled data.

Repeat

Step 2 (E-step): use the trained NB classifier to assign labels to the unlabeled data.

Step 3 (M-step): train a new NB classifier on the labeled data plus the unlabeled data (with the labels from step 2).

Until convergence.
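To make my reading concrete, here is a minimal sketch of the loop as I described it, not the article's actual implementation. It assumes hard labels in step 2 (the article's version may differ), a small multinomial NB with Laplace smoothing, and "convergence" meaning that the guessed labels stop changing. The function names (`train_nb`, `predict`, `em_nb`) and the toy documents are made up for illustration.

```python
import math
from collections import Counter


def train_nb(docs, labels, vocab, classes):
    """Fit a multinomial naive Bayes model with Laplace (add-one) smoothing."""
    prior, word_logp = {}, {}
    for c in classes:
        class_docs = [d for d, y in zip(docs, labels) if y == c]
        prior[c] = math.log((len(class_docs) + 1) / (len(docs) + len(classes)))
        counts = Counter(w for d in class_docs for w in d)
        total = sum(counts.values()) + len(vocab)
        word_logp[c] = {w: math.log((counts[w] + 1) / total) for w in vocab}
    return prior, word_logp


def predict(model, doc):
    """Return the class with the highest log posterior; unknown words are skipped."""
    prior, word_logp = model
    scores = {
        c: prior[c] + sum(word_logp[c][w] for w in doc if w in word_logp[c])
        for c in prior
    }
    return max(scores, key=scores.get)


def em_nb(labeled_docs, labels, unlabeled_docs, max_iter=20):
    classes = sorted(set(labels))
    vocab = {w for d in labeled_docs + unlabeled_docs for w in d}
    # Step 1: train on the labeled data only.
    model = train_nb(labeled_docs, labels, vocab, classes)
    guessed = None
    for _ in range(max_iter):
        # Step 2 (E-step, hard-label assumption): tag the unlabeled data.
        new_guessed = [predict(model, d) for d in unlabeled_docs]
        if new_guessed == guessed:  # labels stopped changing
            break
        guessed = new_guessed
        # Step 3 (M-step): retrain on labeled + newly tagged data.
        model = train_nb(
            labeled_docs + unlabeled_docs, labels + guessed, vocab, classes
        )
    return model, guessed


# Hypothetical toy data: each document is a list of tokens.
labeled_docs = [["ball", "goal"], ["vote", "law"]]
labels = ["sport", "politics"]
unlabeled_docs = [
    ["ball", "team"],
    ["goal", "team", "match"],
    ["vote", "senate"],
    ["law", "senate", "bill"],
]

model, guessed = em_nb(labeled_docs, labels, unlabeled_docs)
print(guessed)
```

On this toy data the loop stops on its second pass, because the labels guessed in step 2 no longer change.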

Here is the question:

In step 2, the labels are assigned by a classifier that was trained on the labeled data, which is the only source of knowledge about correct predictions. Step 3 (the M-step) then updates the classifier using the labels generated in step 2. The whole process therefore seems to rely only on the labeled data, so how can the EM classifier improve the classification? Can someone explain this to me?