In this blog post: http://www.argmin.net/2016/04/18/bottoming-out/

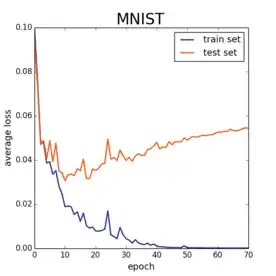

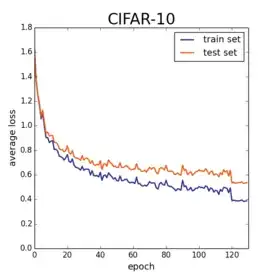

Prof Recht shows two plots:

He says one of the reasons the plot below has a lower train-test gap is because that model was trained with a lower learning rate (and he also manually drops the learning rate at 120 epoch).

Why would a lower learning rate reduce overfitting?