In this article I am reading:

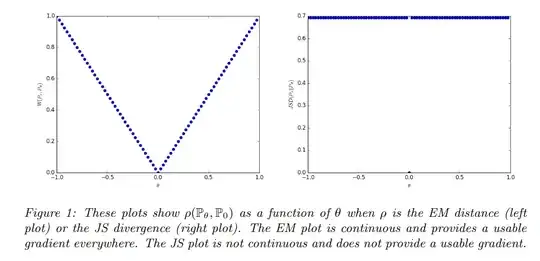

$D_{KL}$ gives us inifity when two distributions are disjoint. The value of $D_{JS}$ has sudden jump, not differentiable at $\theta=0$. Only Wasserstein metric provides a smooth measure, which is super helpful for a stable learning process using gradient descents.

Why is this important for a stable learning process? I have also the feeling this is also the reason for mode collapse in GANs, but I am not sure.

The Wasserstein GAN paper also talks about it obviously, but I think I am missing a point. Does it say JS does not provide a usable gradient? What exactly does that mean?