I am training an agent to do obstacle avoidance. The agent controls its steering angle and its speed. Both are normalized to the range $[-1, 1]$, where the sign encodes direction (i.e. a speed of $-1$ means that it is reversing at its maximum speed).

My reward function penalises the agent for colliding with an obstacle and rewards it for moving away from its starting position. At a time $t$, the reward, $R_t$, is defined as $$ R_t= \begin{cases} r_{\text{collision}},&\text{if collides,}\\ \lambda_d\left(\|\mathbf{p}_t^{x,y}-\mathbf{p}_0^{x,y}\|_2-\|\mathbf{p}_{t-1}^{x,y}-\mathbf{p}_0^{x,y}\|_2 \right),&\text{otherwise,} \end{cases} $$ where $\lambda_d$ is a scaling factor and $\mathbf{p}_t$ gives the pose of the agent at a time $t$ (with $\mathbf{p}_t^{x,y}$ its position component). The idea is to reward the agent for moving away from its initial position, and in a sense for 'exploring' the map. I'm not sure if this is a good way of incentivizing exploration, but I digress.
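For concreteness, here is a minimal sketch of that reward as a Python function. The function name, argument names, and default values (`r_collision=-1.0`, `lambda_d=1.0`) are placeholders chosen for illustration, not the values I actually train with.

```python
import math

def step_reward(p_t, p_prev, p_0, collided, r_collision=-1.0, lambda_d=1.0):
    """One-step reward following the piecewise definition above.

    p_t, p_prev, p_0 are (x, y) positions at time t, time t-1, and the start.
    r_collision and lambda_d defaults are illustrative assumptions.
    """
    if collided:
        return r_collision
    d_t = math.dist(p_t, p_0)        # distance from start at time t
    d_prev = math.dist(p_prev, p_0)  # distance from start at time t-1
    return lambda_d * (d_t - d_prev)

# Moving away from the start is rewarded, moving back is penalised.
away = step_reward((3.0, 4.0), (0.0, 0.0), (0.0, 0.0), collided=False)
back = step_reward((0.0, 0.0), (3.0, 4.0), (0.0, 0.0), collided=False)
```

Note that the non-collision terms telescope: summed over a collision-free episode (without discounting), they add up to $\lambda_d$ times the final distance from the start, regardless of the path taken to get there.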

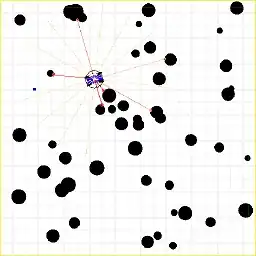

My environment is an unknown two-dimensional map that contains circular obstacles of varying radii. The agent is equipped with a sensor that measures the distance to nearby obstacles (similar to a 2D LiDAR sensor). The figure shows the environment along with the agent.

Since I'm trying to model a car, I want the agent to be able to go forward and reverse; however, when training, the agent's movement is very jerky. It quickly switches between going forward (positive speed) and reversing (negative speed). This is what I'm talking about.

One idea I had was to penalise the agent when it reverses. While that did significantly reduce the jittery behaviour, it also caused the agent to collide with obstacles on purpose. In fact, over time, the average episode length decreased. I think this is the agent's response to the reverse penalties: negative rewards incentivize the agent to reach a terminal state as fast as possible, and in my case the only terminal state is an obstacle collision.
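To illustrate what I suspect is happening, here is a toy comparison of discounted returns. All numbers (`gamma`, `r_collision`, `reverse_penalty`, the 20-step horizon) are made up for the example and are not my training settings. It only compares two trajectories the agent might consider once it is already accruing reverse penalties.

```python
def discounted_return(rewards, gamma=0.99):
    """Discounted sum of a reward sequence, accumulated back to front."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g

# Illustrative values only (assumptions, not my actual hyperparameters).
r_collision = -1.0
reverse_penalty = -0.1

# Option A: collide immediately and end the episode.
crash_now = discounted_return([r_collision])

# Option B: keep reversing (and being penalised) for 20 steps, then collide.
reverse_then_crash = discounted_return([reverse_penalty] * 20 + [r_collision])

# With these numbers, ending the episode early gives the higher return.
print(crash_now > reverse_then_crash)
```

Under these assumed values the early collision comes out ahead, which would be consistent with the shrinking episode lengths I observed.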

So then I tried rewarding the agent for going forward instead of penalising it for reversing, but that did not seem to do much. At this point, I don't think trying to correct the jerky behaviour directly through rewards is the proper approach, but I'm also not sure how else I could do it. Maybe I just need to rethink what my reward signal wants the agent to achieve?

How can I rework the reward function to have the agent move around the map, covering as much distance as possible, while also maintaining smooth movement?