I am trying to train a DDPG agent augmented with Hindsight Experience Replay (HER) to solve the KukaGymEnv environment. The actor and critic are simple neural networks with two hidden layers (as in the HER paper).

More precisely, the hyper-parameters I am using are

- The actor's hidden layer's sizes: [256, 128] (using ReLU activations and a tanh activation after the last layer)

- Critic's hidden layer's sizes: [256, 128] (Using ReLU activations)

- Maximum Replay buffer size: 50000

- Actor learning rate: 0.000005 (Adam Optimizer)

- Critic learning rate: 0.00005 (Adam Optimizer)

- Discount rate: 0.99

- Polyak constant : 0.001

- The transitions are sampled in batches of 32 from the replay buffer for training

- Update rate: 1 (target networks are updated after each time step)

- The action selection is stochastic with the noise being sampled from a normal distribution of mean 0 and standard deviation of 0.7

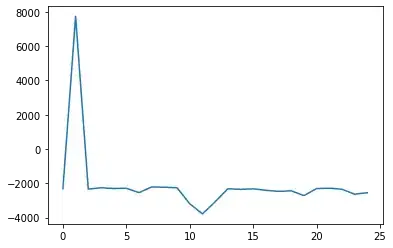

I trained the agent for 25 episodes with a maximum of 700 time-steps each and got the following reward plot:

The reward shoots up to a very high value of about 8000 for the second episode and steeply falls to -2000 in the very next time step, never to rise again. What could be the reason for this behavior and how can I get it to converge?

PS : One difference I observed while training this agent from while training a simple DDPG agent is that for simple DDPG, the episode would usually terminate at around 450 time-steps, thus never reaching the maximum specified timesteps. However, here, no episode terminated before the specified 700 maximum time steps. This might have something to do with the performance.