I have not found much information on this, but I am wondering whether there is a standard way to take the outputs of a BERT model used for sentiment analysis and connect them back to the original tokenized input, to understand which words influenced the predicted sentiment most.

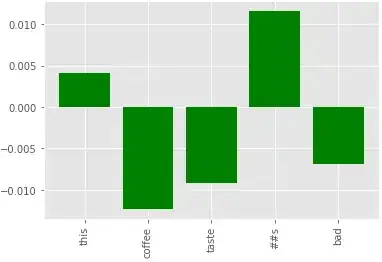

For example, the string "this coffee tastes bad" is classified as negative. Is it possible to analyze the outputs of the hidden layers and tie them back to each token, to understand which words in the sentence had the most influence on the negative prediction?

The chart in this post is the result of my attempt to explore this, but I am not sure it makes sense, and I do not think I am interpreting it correctly. I take the output of the last hidden layer, which in this case has shape (1, 7, 768) ([CLS] + 5 word tokens + [SEP]), then loop over the tokens, summing each token's 768 values and computing the average. The resulting per-token averages are what the chart shows.
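To make the computation concrete, here is a minimal sketch of the averaging step. It is not my exact code: it assumes NumPy, uses a random array as a stand-in for the real last hidden state (same shape, (1, 7, 768)), and the token labels and the `per_token_mean` helper are placeholders made up for illustration.

```python
import numpy as np

# ASSUMPTION: random values stand in for the real BERT output.
# Only the shape (batch=1, tokens=7, hidden=768) matches the post.
rng = np.random.default_rng(0)
last_hidden_state = rng.normal(size=(1, 7, 768))

# ASSUMPTION: placeholder labels; the real ones come from the tokenizer.
tokens = ["[CLS]", "tok1", "tok2", "tok3", "tok4", "tok5", "[SEP]"]


def per_token_mean(hidden):
    """Average the hidden values of each token in the first (only) sequence.

    Equivalent to summing each token's 768 values and dividing by 768.
    """
    return hidden[0].mean(axis=-1)


scores = per_token_mean(last_hidden_state)

# One number per token; these are the values that get plotted.
for token, score in zip(tokens, scores):
    print(f"{token:>6}: {score:+.4f}")
```

Each token ends up with a single scalar, so the open question is whether that scalar says anything about the token's contribution to the sentiment.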

Any thoughts on whether this is meaningful, or whether I am way off in my approach, would be appreciated. I may be misunderstanding what the output values themselves represent.

Hopefully this is enough to convey what I am trying to do: connect each word to the positive or negative associations that contributed to the final classification.